Introduction

The U.S. Naval Air Warfare Center Aircraft Division (NAWCAD) Verification, Validation, and Accreditation (VV&A) Branch has developed and is executing a cost-effective, risk-based VV&A process for models and simulations (M&S) used to support the U.S. Department of Defense (DoD). The original version of the process was created more than 25 years ago by the predecessor to the VV&A Branch, the Joint Accreditation Support Activity, and has been further developed, used, and refined over the last 20 years in support of a wide variety of M&S domains and military systems. It is a systematic and straightforward way to determine whether a proposed M&S has the credibility to support its intended uses. This risk-based VV&A process equally applies to cases where accreditation is required and cases where formal accreditation is not required but verification and validation (V&V) is needed. It also applies to any M&S and test facilities that include live, virtual, and constructive simulations.

What Is Credibility?

Based on over 30 years of working in the field of M&S credibility and discussing what it means to various scientists, engineers, and mathematicians using the results of M&S for decision-making, the authors have concluded that M&S credibility is a function of the following three factors:

- Capability – the functions the M&S models and the level of detail with which they are modeled should support the anticipated uses.

- Accuracy – how accurate it must be should depend on the risks involved if the answers are incorrect.

- Usability – the extent of available user support should ensure it is not misused.

Any robust assessment of M&S credibility must consider not only accuracy but also capability and usability. Capability is the characteristic that ties the M&S to the problem; it describes what the M&S needs to do to support the intended use. Accuracy describes how well the M&S solves the problem in terms of three elements: software accuracy, input/embedded data accuracy, and output accuracy. Usability ties the M&S to a useful solution by ensuring that it will not be misused. Credibility should be defined in terms of those three characteristics as follows:

M&S Credibility: The M&S has sufficient capability, accuracy (software, data, and output), and usability to support the intended use.

Naval Air Systems Command (NAVAIR) VV&A Process

The risk-based VV&A process starts with defining the intended uses of the M&S to support program decision-making. It continues with a detailed analysis of what is required for the M&S to satisfy those intended uses, how to demonstrate that those requirements have been met (or not), and what metrics will be used to measure the M&S against those requirements. A final check on the suitability of the M&S for the intended use is a risk assessment: What are the risks of using the M&S for the intended purpose given all that is known about its credibility once the VV&A effort is completed?

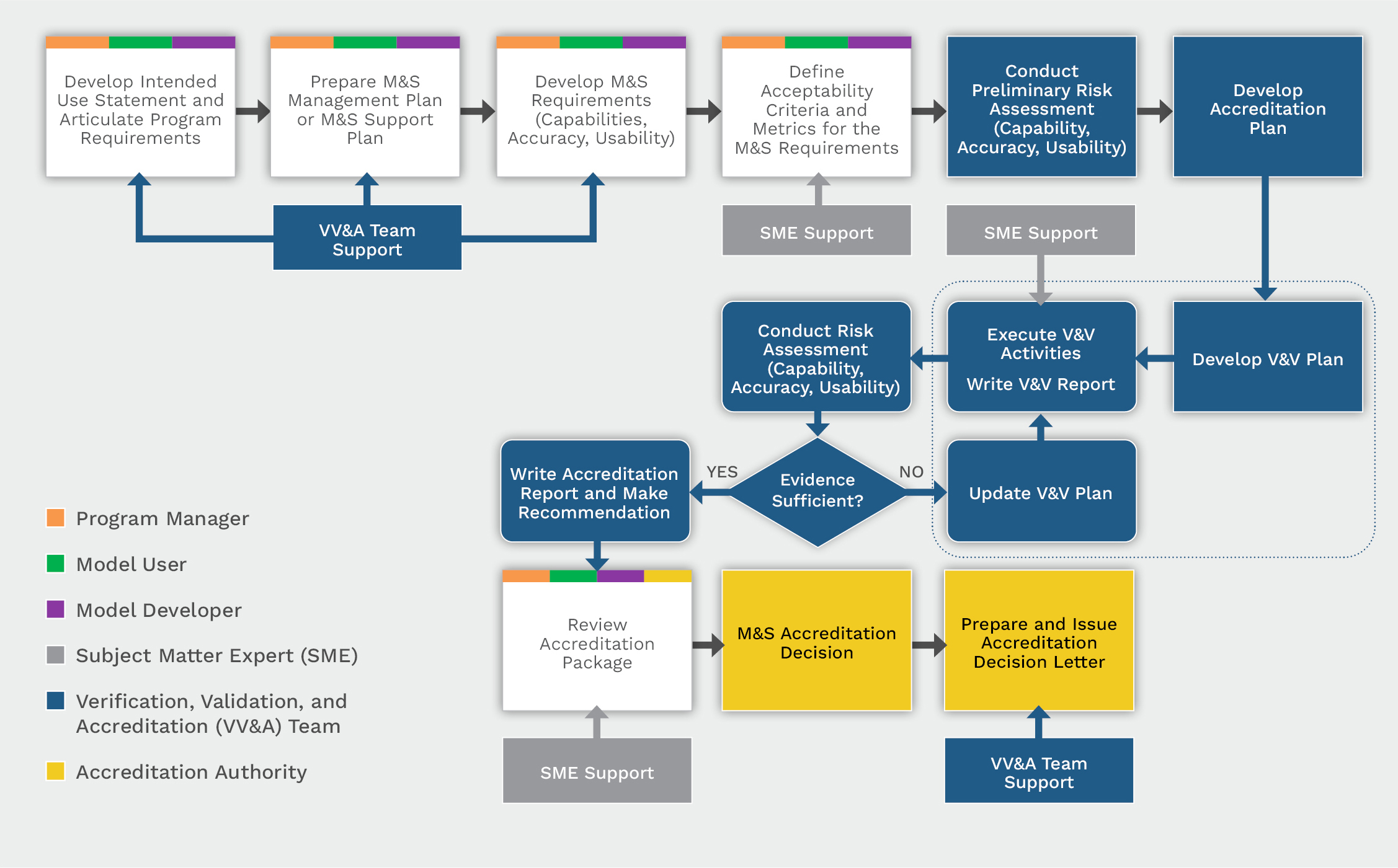

Figure 1 depicts an overview of steps in the process, with responsibilities assigned to the various organizations involved. The first and possibly hardest step in the VV&A process is to thoroughly articulate the intended use of the M&S for the application at hand (what questions will be answered using M&S outputs and how). The next step is to develop M&S and credibility requirements and acceptance criteria based on that specific intended use statement (SIUS) (what will the M&S need to do, how accurate must it be, and what information will the accreditor need to see to decide whether to accept it or not). The third step is to assess the risk of using M&S results for the intended use; the risk assessment identifies gaps in credibility that need to be filled and builds an accreditation case for the M&S. Those gaps form the basis of V&V and accreditation plans. Subject matter expert (SME) reviews of the information elicit SME accreditation recommendations and face validation results. The accreditation authority reviews the accreditation case and any residual risks before deciding whether to accept the risk of using the M&S, reject it, or accept it with restrictions and/or workarounds. The next few sections present an example for a six-degree-of-freedom (6-DOF) flight simulation of a long-endurance, unmanned aerial vehicle.

Figure 1. NAVAIR Risk-Based VV&A Process Overview (Source: NAVAIR VV&A Branch).

SIUS

The purpose of the SIUS is to state the program’s goals for the M&S concisely and completely, describe a potential M&S user’s needs and questions, and explain how M&S might help meet those needs. An SIUS must be developed in enough detail so that accreditation requirements can be determined (general statements of intended use are insufficient) [1]:

Carefully define the specific issues to be investigated by the study and the measures of performance that will be used for evaluation. Models are not universally valid but are designed for specific purposes…A great model for the wrong problem will never be used…

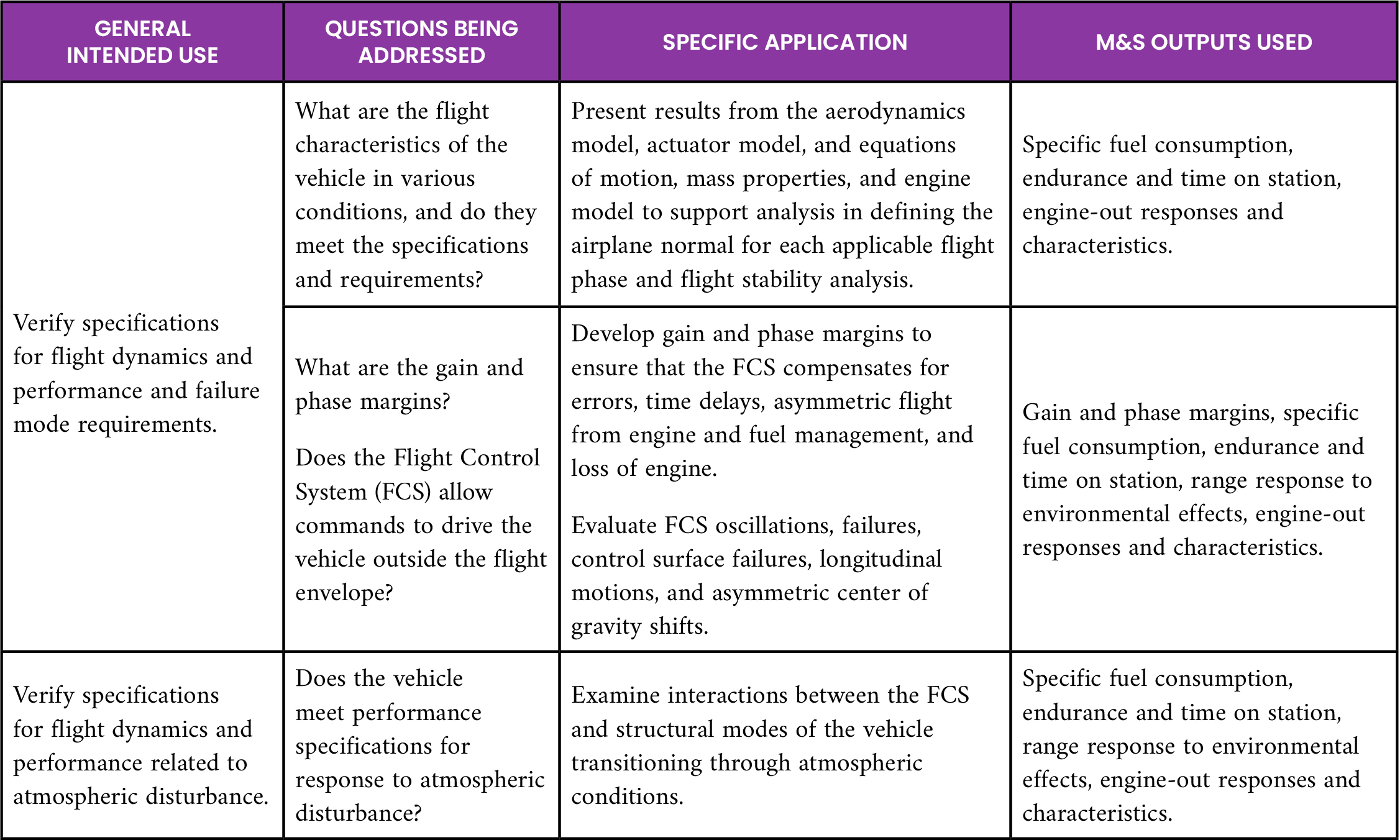

Table 1 shows an example from an SIUS for a 6-DOF flight simulation.

Table 1. Air Vehicle 6-DOF Flight Simulation SIUS

M&S Accreditation Requirements, Acceptability Criteria, and Metrics

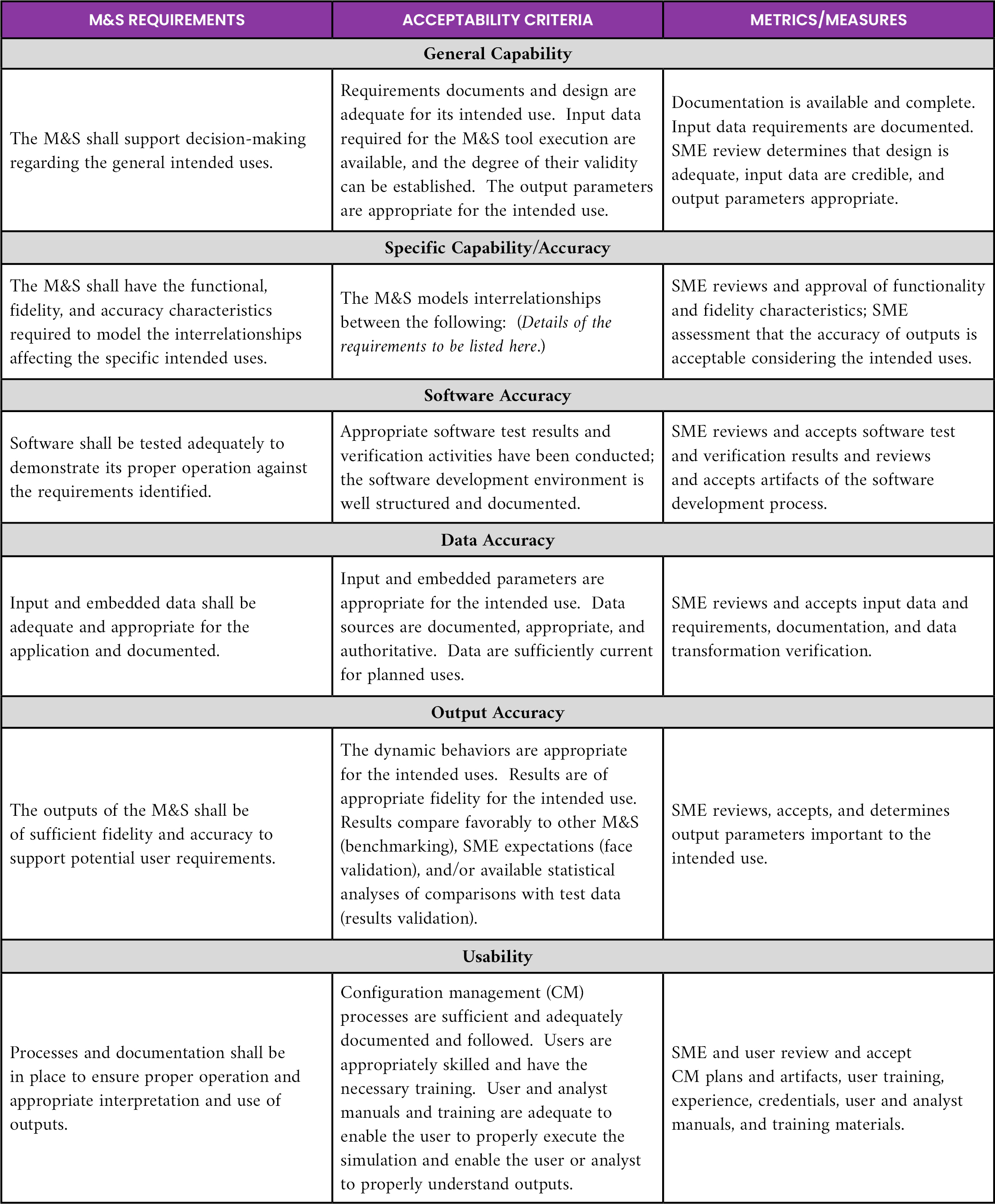

Whether an M&S is credible and hence acceptable for an application (intended use) is determined by how well it meets the requirements of that intended use. For VV&A, requirements, acceptability criteria, and metrics/measures are defined as follows:

- M&S Requirements: What features and characteristics does M&S need to support the intended use?

- Acceptability Criteria: What quantitative or qualitative properties must M&S have to meet the requirements for intended use?

- Metrics/Measures: How will it be determined whether the acceptability criteria are met?

The best way to define M&S requirements, acceptability criteria, and metrics is in terms of the three components that define M&S credibility—capability, accuracy (software, data, and outputs), and usability. How well the M&S meets those requirements must be determined by an assessment method, with criteria identified as to how the user will decide if it passes or fails. These three key credibility components determine the M&S features needed to satisfy the intended uses. Note that output accuracy is a comparison between M&S outputs and a representation of the real world; the real world can be represented in three ways—benchmarking (comparing with another M&S of known credibility), face validation (comparing with SME opinions on how the system being simulated behaves in the real world), and results validation (comparing with test data).
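As a rough illustration of how a requirement, its acceptability criterion, and its metric fit together, the following Python sketch encodes a single row of a requirements matrix. The field names, the example requirement (climb rate), the numeric values, and the 5% tolerance are all hypothetical; they are not taken from Table 2 or Table 3.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Requirement:
    """One row of a hypothetical M&S requirements matrix."""
    component: str                   # "capability", "accuracy", or "usability"
    statement: str                   # what the M&S needs to support the intended use
    criterion: str                   # property the M&S must have to meet the requirement
    metric: Callable[[], float]      # how the property is measured
    passes: Callable[[float], bool]  # pass/fail rule applied to the measurement

    def assess(self) -> bool:
        return self.passes(self.metric())


def relative_error(simulated: float, measured: float) -> float:
    """Results-validation style metric: M&S output compared with test data."""
    return abs(simulated - measured) / abs(measured)


# Hypothetical output-accuracy requirement; all numbers are illustrative only.
req = Requirement(
    component="accuracy",
    statement="Predict steady-state climb rate",
    criterion="Within 5% of flight test data",
    metric=lambda: relative_error(simulated=10.3, measured=10.0),
    passes=lambda err: err <= 0.05,
)

print(req.component, req.assess())
```

Keeping the metric and the pass/fail rule as separate fields mirrors the distinction drawn above between how something is measured and what value is acceptable.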

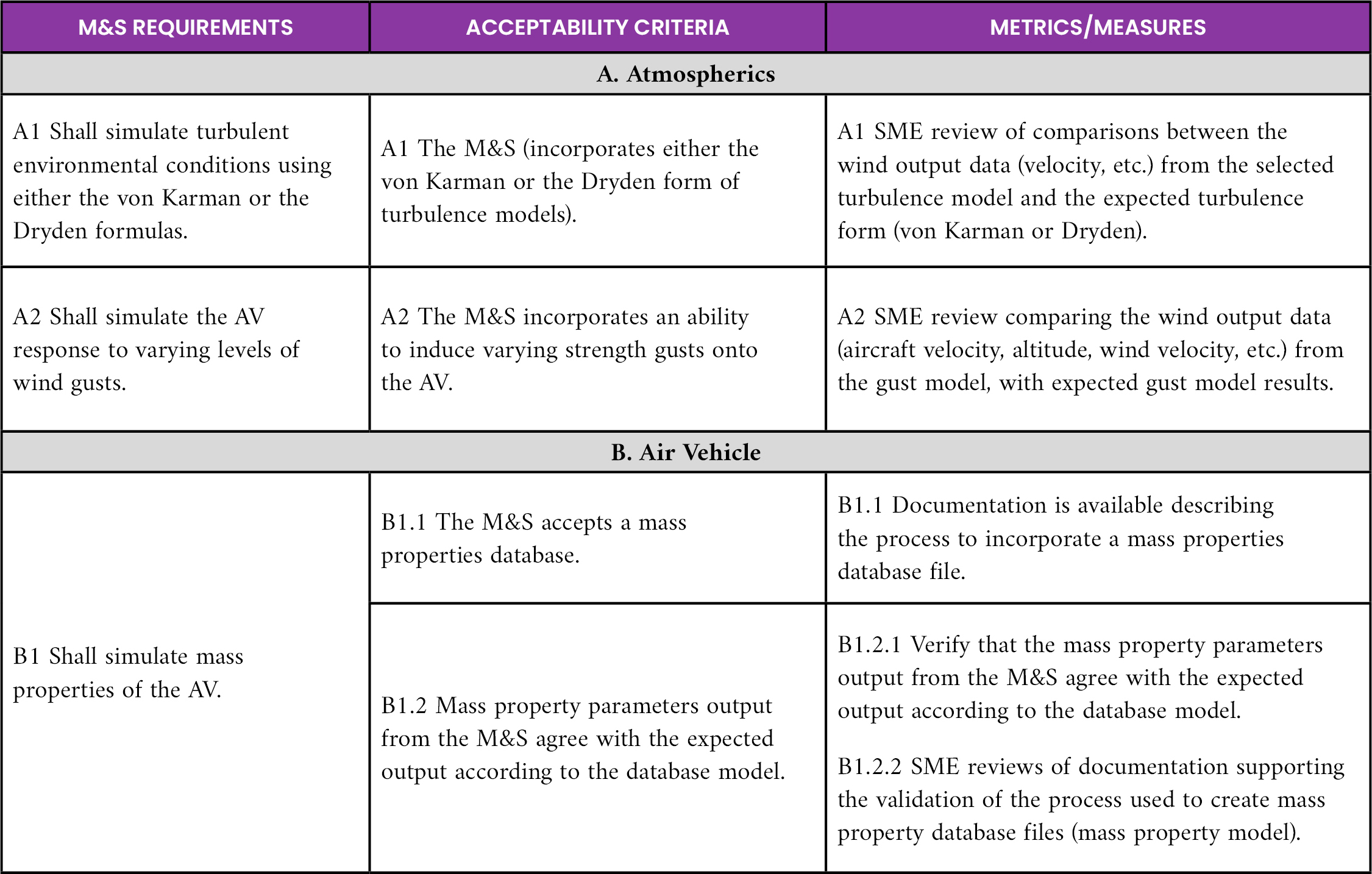

Developing the details of the requirements, the acceptability criteria for each requirement, and the metrics and measures to evaluate the M&S against those acceptability criteria requires working with M&S developers and users (such as the M&S integrated product team [IPT] lead). Requirements for VV&A activities are developed from the SIUS, the acceptability criteria, and the metrics. In other words, how much credibility information is needed to demonstrate whether the M&S supports the intended use depends on what must be shown to meet the requirements (acceptability criteria), how it is measured (metrics/measures), and the risks associated with incorrect results. Table 2 shows a summary of the types of criteria and metrics used. Table 3 shows part of a matrix of M&S requirements, acceptability criteria, and metrics for the same air vehicle (AV) 6-DOF flight simulation previously discussed.

Table 2. Overview of M&S Requirements, Acceptability Criteria, and Metrics [3]

Table 3. Partial Table for Air Vehicle 6-DOF [3]

Risk Assessment

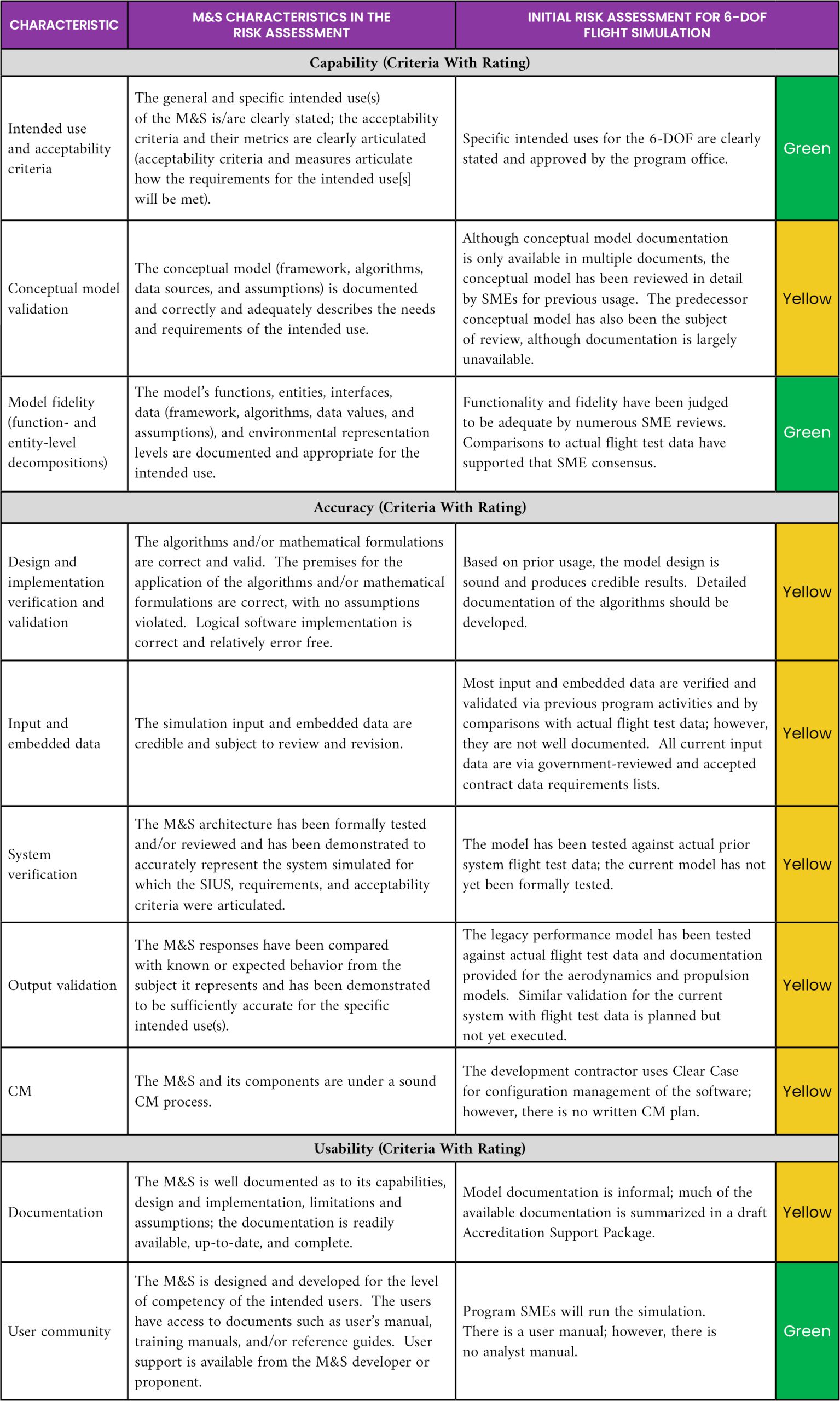

Based on the available credibility information (including V&V results), a formal risk assessment process is used to evaluate the risk associated with making an accreditation decision. A set of 10 characteristics of M&S has been developed under the three categories of M&S credibility (capability, accuracy, and usability). The M&S risk is evaluated for the intended uses by reviewing the available credibility evidence in each of those 10 areas. Table 4 shows these characteristics and the criteria for a “green” rating in each, together with the results of the risk assessment of the 6-DOF flight simulation as an example of applying the risk assessment process; the overall risk was assessed as “moderate.” An operational risk assessment is also conducted to determine the effects of errors on various parameters important to the intended use.
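The bookkeeping behind such an assessment can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the characteristic names are placeholders (the actual 10 characteristics are those in Table 4), the split across categories is arbitrary, the yellow and red levels are assumed, and the "worst rating wins" roll-up rule is an assumption rather than the documented NAVAIR method.

```python
from enum import IntEnum


class Rating(IntEnum):
    GREEN = 0   # credibility evidence meets the "green" criteria
    YELLOW = 1  # assumed level: partial evidence, a gap exists
    RED = 2     # assumed level: little or no evidence


# Placeholder names and an arbitrary split across the three categories.
ratings = {
    "capability": {"characteristic_1": Rating.GREEN, "characteristic_2": Rating.GREEN},
    "accuracy": {
        "characteristic_3": Rating.GREEN,
        "characteristic_4": Rating.YELLOW,
        "characteristic_5": Rating.GREEN,
        "characteristic_6": Rating.YELLOW,
        "characteristic_7": Rating.GREEN,
    },
    "usability": {
        "characteristic_8": Rating.GREEN,
        "characteristic_9": Rating.YELLOW,
        "characteristic_10": Rating.GREEN,
    },
}

RISK_LABEL = {Rating.GREEN: "low", Rating.YELLOW: "moderate", Rating.RED: "high"}


def overall_risk(ratings):
    """Assumed roll-up rule: the worst individual rating sets the overall risk."""
    worst = max(r for group in ratings.values() for r in group.values())
    return RISK_LABEL[worst]


def credibility_gaps(ratings):
    """List every characteristic not rated green, as input to V&V planning."""
    return [
        (category, name)
        for category, group in ratings.items()
        for name, rating in group.items()
        if rating != Rating.GREEN
    ]


print(overall_risk(ratings))
for category, name in credibility_gaps(ratings):
    print(category, name)
```

In practice the overall rating is a judgment made by the assessment team; a mechanical rule like this one is useful mainly for tracking which characteristics still carry gaps.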

Table 4. M&S Characteristics Risk Assessment Criteria and Results Summary

Table 5. Example Verification Techniques

Plan V&V to Reduce Risk

Preliminary risk assessments, which result in an initial “gap assessment” of the available credibility information on the M&S, are conducted to focus V&V-related efforts on the gaps identified and the risks to the intended uses associated with those gaps. This process leads to identifying requirements for additional information to be collected or generated to reduce those risks. These information requirements are then compared with any additional available information, and a list of credibility “shortfalls” is compiled. Each element of this list is then evaluated for its impact on risk. Unmet requirements for simulation credibility that have acceptable (i.e., low risk) workarounds are removed from the list. Unmet requirements for simulation credibility that have no acceptable workarounds generate a requirement for more detailed information in the appropriate category. This may include additional V&V and software testing and documentation, collecting additional test data, creating (and implementing) a configuration management (CM) plan, establishing new user support functions, or enhancing M&S functionality to meet the application requirements.
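The shortfall triage described above can be sketched as a simple filter. The `Shortfall` fields, the risk labels, and the example entries below are hypothetical and serve only to show the logic: shortfalls with a low-risk workaround drop off the list, and the rest become candidate activities for the V&V and accreditation plans.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Shortfall:
    """An unmet credibility requirement (hypothetical record layout)."""
    requirement: str
    category: str                          # "capability", "accuracy", or "usability"
    workaround: Optional[str] = None       # None means no workaround is known
    workaround_risk: Optional[str] = None  # "low", "moderate", or "high"


def triage(shortfalls: List[Shortfall]) -> Tuple[List[Shortfall], List[Shortfall]]:
    """Split shortfalls into (removed via low-risk workaround, needing more work)."""
    removed, needs_work = [], []
    for s in shortfalls:
        if s.workaround is not None and s.workaround_risk == "low":
            removed.append(s)
        else:
            needs_work.append(s)
    return removed, needs_work


# Illustrative entries only; not drawn from the 6-DOF example.
shortfalls = [
    Shortfall("Documented configuration management", "usability"),
    Shortfall("Validation data at high altitude", "accuracy",
              workaround="Restrict use to the validated altitude band",
              workaround_risk="low"),
    Shortfall("Sensor model fidelity", "capability",
              workaround="Use bounding assumptions",
              workaround_risk="moderate"),
]

removed, needs_work = triage(shortfalls)
for s in needs_work:
    print("Plan activity for:", s.requirement, "(" + s.category + ")")
```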

Activities required to generate this information are then included in the accreditation and V&V plans. The program manager (PM) wanting to use the M&S will have to provide the resources necessary to generate that information. Alternatively, if the PM cannot provide more funding or chooses not to do so, the PM can choose to use the M&S with the amount of evidence available and accept a higher level of risk. Some of the recommended activities for the example 6-DOF M&S are evident in Table 4; others were based on a list of recommended activities to support reducing an overall “moderate risk” assessment to “low risk” [2].

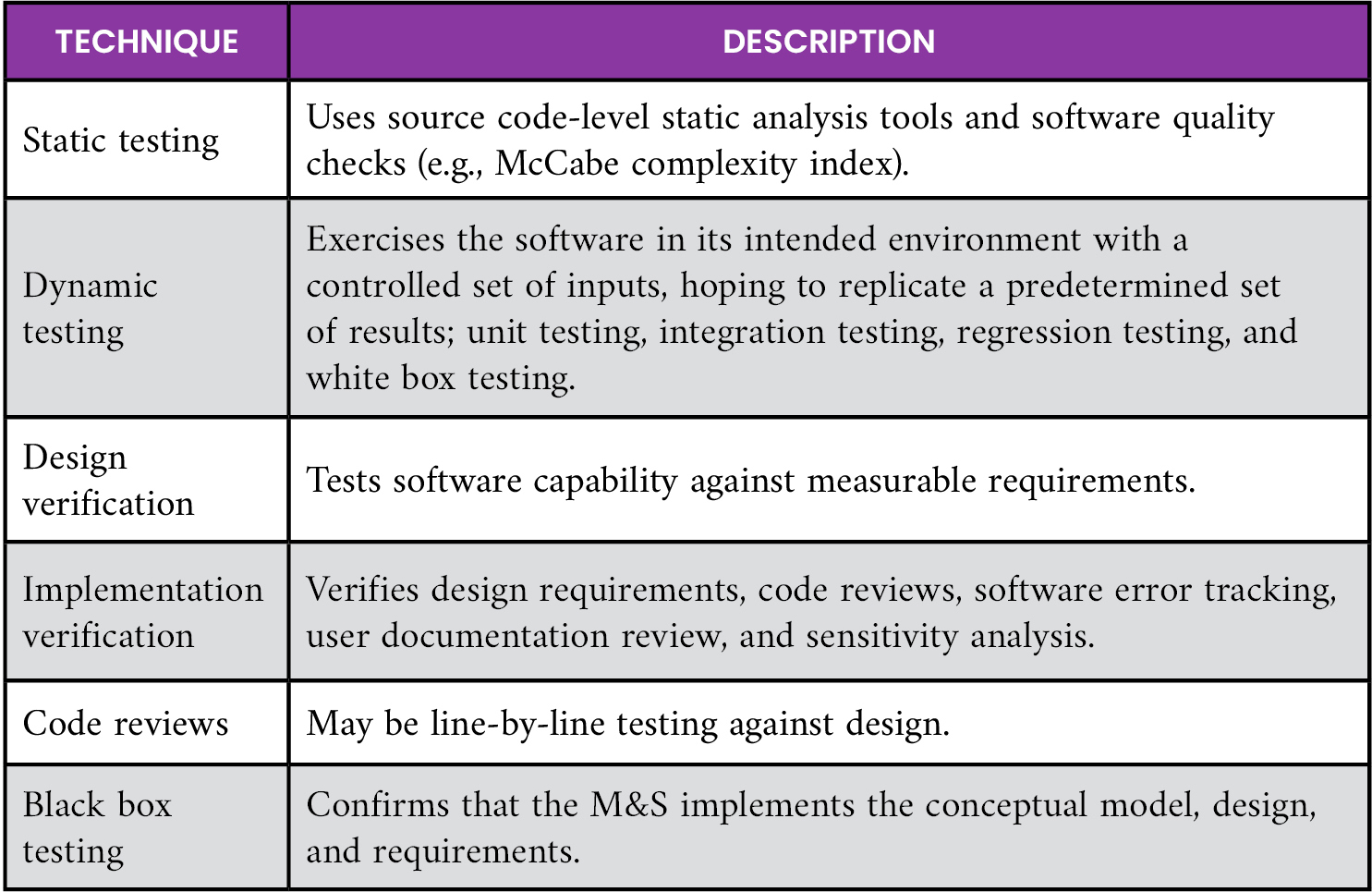

Conduct V&V and Other Credibility Activities

Once the accreditation and V&V plans have been developed based on the preliminary risk assessment, the activities in those plans are implemented. Verification activities include determining and documenting whether the SIUS is correct and appropriate for the current application; determining if defined capability, accuracy, and usability requirements are correct and complete for the SIUS; and determining if the capability, accuracy, and usability implementations are correct and appropriate per conceptual and design specifications and standards. Table 5 lists some example verification activities.

Validation activities include conducting and documenting data V&V checks, comparing simulation output/results to measured data (results validation) and/or an existing validated M&S output (benchmarking), and face validation (SME review). Sensitivity analysis can be a powerful tool supporting face validation reviews, developing requirements for validation test data, and analyzing M&S data against test results. Statistical analysis techniques play an important role in M&S output comparisons with test data. Some techniques that should be considered include Bayesian statistics, testing for intervals, and goodness of fit approaches like the Chi-Square, Kolmogorov-Smirnov (nonparametric), and Fisher’s Combined Probability tests.
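Two of the techniques named above can be sketched with the Python standard library alone. The sketch below computes the two-sample Kolmogorov-Smirnov statistic (the largest gap between the empirical distributions of M&S outputs and test data) with its large-sample critical value, and combines independent p-values using Fisher's method. The function names and sample data are illustrative assumptions; a real analysis would more likely rely on an established statistics package and would check each test's assumptions first.

```python
import math
from bisect import bisect_right


def ks_two_sample(sim, test):
    """Two-sample KS statistic D: largest gap between the two empirical CDFs."""
    a, b = sorted(sim), sorted(test)
    d = 0.0
    for v in a + b:
        fa = bisect_right(a, v) / len(a)  # fraction of sim values <= v
        fb = bisect_right(b, v) / len(b)  # fraction of test values <= v
        d = max(d, abs(fa - fb))
    return d


def ks_critical(n, m, alpha=0.05):
    """Large-sample critical value for D at significance level alpha."""
    return math.sqrt(-0.5 * math.log(alpha / 2.0)) * math.sqrt((n + m) / (n * m))


def fisher_combined(p_values):
    """Fisher's method: X = -2 * sum(ln p) follows chi-square with 2k d.o.f."""
    k = len(p_values)
    x = -2.0 * sum(math.log(p) for p in p_values)
    # Chi-square survival function in closed form for even degrees of freedom.
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, k):
        term *= half / i
        total += term
    return x, math.exp(-half) * total


# Illustrative data only: simulated versus measured values of one output.
sim_runs = [101.2, 99.8, 100.5, 102.1, 98.9, 100.0, 101.7, 99.4]
test_data = [100.9, 99.1, 100.2, 101.5, 98.7, 100.8, 99.9, 101.1]

d = ks_two_sample(sim_runs, test_data)
print("D =", d, "critical =", ks_critical(len(sim_runs), len(test_data)))

# Combine p-values from independent comparisons (hypothetical values).
statistic, p_combined = fisher_combined([0.20, 0.35, 0.08])
print("Fisher X =", statistic, "combined p =", p_combined)
```

A value of D below the critical value means the test does not reject the hypothesis that both samples come from the same distribution. With only eight points per sample, the large-sample critical value is a rough guide at best.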

SME reviews of V&V data resulting from the 6-DOF V&V effort were conducted. The SMEs represented several interested organizations, including the unmanned aerial vehicle (UAV) program office, their support contractor (who was also running the 6-DOF as part of development), independent SMEs, and one or two representatives of operational test and evaluation organizations who would have vested interests in the UAV program later in its development. The reviews resulted in some recommendations for further activities (which were planned for the next iteration of UAV development), considerable discussion of the technical merits of the 6-DOF and V&V results, and a consensus that the 6-DOF met the acceptability criteria for its intended use.

Update Risk Assessment and Iterate the Process as Needed

The VV&A process is iterated as necessary by updating the risk assessment, as tasks are completed in accordance with the V&V and accreditation plans. After all necessary iterations are completed, a “Final Risk Assessment” is developed and documented using the 10 M&S characteristics shown in Table 4. That final assessment determines the residual risk associated with applying the M&S to the intended uses after all V&V and other accreditation activities have been completed. That residual risk assessment, along with accompanying recommendations, is provided as supporting information to the accreditation authority. The final risk assessment for the 6-DOF M&S after all V&V activities were completed was “low.”

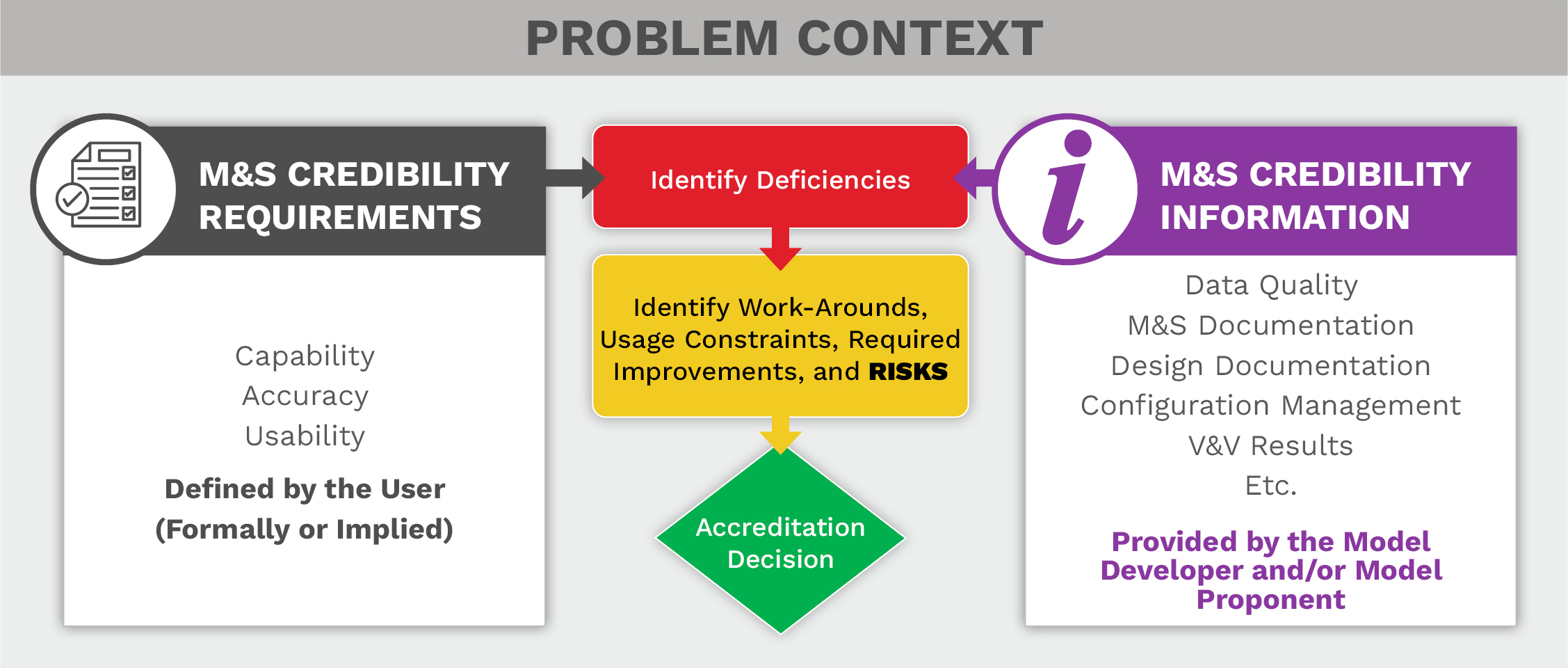

Accreditation Assessment, Package, and Report

All metrics are ultimately adjudicated by SME review, making use of all information obtained and/or developed during the V&V process, which may include extensive comparisons to test data, benchmarking against other simulations, or comparing to an SME’s perception of the real world. An accreditation decision ultimately relies on the accreditation agent/team leveraging personnel who know the subject matter to make a recommendation (based on the information available) as to whether the tool meets the requirements and can satisfy the intended uses and what should be done about it if it does not. Accreditation should be based on an objective comparison of the known credibility information with the credibility requirements, as shown in Figure 2. Based on that comparison, the accreditor can decide to accept the residual risk, require workarounds for risk areas or improvements to the M&S, or not accredit it at all.

Figure 2. The Essence of Accreditation (Source: NAVAIR VV&A Branch).

The results of the V&V efforts and the accreditation recommendations are documented in a tailored MIL-STD-3022 [3] format. MIL-STD-3022 describes the standard formats for accreditation plans and reports and V&V plans and reports. The accreditation recommendation is documented in a letter from the M&S proponent (e.g., the M&S IPT lead) to the accreditation authority (the PM for program-related M&S uses), who is the final decision-maker and ultimate user of the M&S results as described in the SIUS. Based on all the work accomplished by the VV&A team and the 6-DOF developer, it was recommended that the 6-DOF be fully accredited to support its intended use.

Conclusions

This systematic VV&A process consists of determining, verifying, demonstrating, testing, and documenting whether the M&S requirements, acceptability criteria, and associated metrics and measures have been satisfied correctly. Because the M&S requirements are determined and defined from the SIUS, PMs, operational testers, and M&S users can have confidence in knowing whether the M&S has the credibility necessary to adequately support its intended use. This process applies to all M&S and test facilities, including live, virtual, and constructive simulations. What makes this process cost-effective is that any V&V activities are focused on requirements driven by the intended use; no V&V activities are conducted that do not directly support the requirements of the SIUS.

References

1. Law, A. M. Simulation Modeling and Analysis. 5th edition, McGraw Hill, ISBN 978-0-07-340132-4, p. 240, 2015.

2. Naval Air Warfare Center. “Information Requirements In Support of Accreditation Volume II of the Accreditation Requirements Study Report.” Computer Sciences Corporation for the Susceptibility Model Assessment and Range Test (SMART) Project, JTCG/AS-93-SM-20, Weapons Division, China Lake, CA, February 1994.

3. U.S. DoD. Department of Defense Standard Practice: Documentation of Verification, Validation, and Accreditation (VV&A) for Models and Simulations. MIL-STD-3022, 28 January 2008.

Biographies

David H. Hall works for the SURVICE Engineering Company as the chief analyst for VV&A and analysis support services under contract to the NAWCAD. Mr. Hall holds B.S. and M.A. degrees in mathematics from California State University at Long Beach.

David J. Turner is the Patuxent River area operation manager for the SURVICE Engineering Company, supporting a wide variety of M&S VV&A programs at NAWCAD. Mr. Turner holds a B.S. degree in aerospace engineering from Pennsylvania State University.