Introduction

This article explores several emerging applications of artificial intelligence (AI) and machine learning (ML) for U.S. Department of the Navy (DON) energy autonomy and digital transformation. It also summarizes relevant research and development efforts carried out by the University of Dayton Research Institute (UDRI) under a contract with the Naval Facilities Engineering and Expeditionary Warfare Center (NAVFAC EXWC).

Background

U.S. Navy facilities account for a considerable fraction of total energy consumption in the defense sector. To reduce energy costs at these facilities, numerous research, development, and demonstration programs on distributed and renewable generation, energy storage, and energy efficiency technologies have been carried out over the past decades [1–5].

Energy systems at installations typically contain a variety of components that serve power-critical missions or facilities. Traditionally, control and optimization of these systems are addressed at the component level (e.g., generator controllers and battery controllers). Solutions such as microgrids can provide improved resilience and performance [3–5], but challenges remain for highly autonomous operation. For example, it is difficult to achieve real-time, system-wide energy optimization that considers a look-ahead time horizon and predictions of factors such as load profiles, renewable energy availability, and fuel/electricity prices.

When multiple generators operate in parallel, each may run at full or partial capacity, leading to varying fuel efficiencies. For instance, generators must adapt to different roles, such as supporting the bus voltage or sharing active or reactive power, particularly when mobile generators are rapidly deployed and connected under unknown conditions [5]. Load shedding should also follow dynamic priority levels that depend on operational data, scenarios, or user preferences. Enhanced situational awareness of generator fuel efficiency, load patterns, and the microgrid as a whole is therefore essential for more efficient use of all generation and storage assets. However, traditional control solutions do not capture or address all of these factors effectively and may result in inefficiency and other problems (e.g., limited adaptability or resilience) in real-time operation.
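To illustrate why the split of load among parallel generators matters, the sketch below assumes two generators with hypothetical quadratic fuel curves (the coefficients are invented for illustration, not measured Navy data) and grid-searches the split that minimizes total fuel. A real dispatcher would also handle unit commitment, ramp limits, and voltage or reactive-power roles.

```python
# Hypothetical quadratic fuel curves: fuel rate (L/h) = a + b*P + c*P**2, with P in kW.
# The coefficients are illustrative assumptions, not manufacturer or field data.
GEN_A = {"a": 2.0, "b": 0.20, "c": 0.0004, "p_max": 200.0}
GEN_B = {"a": 3.0, "b": 0.18, "c": 0.0006, "p_max": 300.0}


def fuel_rate(gen: dict, p_kw: float) -> float:
    """Fuel consumption (L/h) of a running generator at output p_kw."""
    return gen["a"] + gen["b"] * p_kw + gen["c"] * p_kw ** 2


def dispatch(demand_kw: float, step_kw: float = 1.0) -> tuple[float, float, float]:
    """Grid-search the GEN_A/GEN_B split of demand_kw that minimizes total fuel.

    Both units are assumed to be online. Returns (p_a, p_b, total_fuel_l_per_h).
    """
    best = None
    for k in range(int(GEN_A["p_max"] / step_kw) + 1):
        p_a = k * step_kw
        p_b = demand_kw - p_a
        if not 0.0 <= p_b <= GEN_B["p_max"]:
            continue  # this split would violate GEN_B's output limits
        total = fuel_rate(GEN_A, p_a) + fuel_rate(GEN_B, p_b)
        if best is None or total < best[2]:
            best = (p_a, p_b, total)
    if best is None:
        raise ValueError("demand exceeds combined generator capacity")
    return best


p_a, p_b, total = dispatch(300.0)
even = fuel_rate(GEN_A, 150.0) + fuel_rate(GEN_B, 150.0)
print(f"optimal split {p_a:.0f}/{p_b:.0f} kW uses {total:.2f} L/h; an even split uses {even:.2f} L/h")
```

With these assumed curves, the optimum (170 kW/130 kW) is where both units have the same incremental fuel cost (0.336 L/kWh), and it saves about 0.4 L/h compared with an even split; actual savings depend entirely on the real fuel curves.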

With advances in sensor technologies, it is now possible to install many cost-effective sensors in distributed power plants and load centers and to collect and visualize large volumes of data on hundreds or even thousands of parameters and variables [6, 7]. The question remains how these large data sets can be used to improve energy generation/utilization efficiency and reduce energy costs. Because these energy systems involve multiple complex energy conversion and flow processes, it is first necessary to determine which data sets are most important for generating energy savings and how they can be used to optimize operations before real benefits can be obtained. Modeling the various energy flow processes, understanding the options and impact of potential energy-saving technologies, and even automating energy-saving processes are therefore very important for facility managers as they plan strategic facility upgrades and improve autonomous operation practices.
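One simple way to begin answering "which data sets matter most" is to screen candidate measurements by the strength of their correlation with the quantity of interest. The sketch below is a hypothetical example of that screening step (the variable names and values are invented). Correlation only flags candidates; it does not establish cause and effect, which the physics-guided modeling approach described in this article is intended to address.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either series is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return 0.0 if sx == 0.0 or sy == 0.0 else cov / (sx * sy)


def rank_variables(candidates: dict[str, list[float]], target: list[float]) -> list[tuple[str, float]]:
    """Rank candidate measurement series by |correlation| with the target series."""
    scores = {name: abs(pearson(series, target)) for name, series in candidates.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical hourly data for one facility (names and values are invented).
energy_kwh = [10.0, 12.0, 15.0, 19.0, 24.0]
candidates = {
    "outdoor_temp_c": [20.0, 22.0, 25.0, 29.0, 34.0],
    "occupancy": [5.0, 3.0, 6.0, 4.0, 5.0],
    "door_sensor": [1.0, 1.0, 1.0, 1.0, 1.0],
}
ranking = rank_variables(candidates, energy_kwh)
for name, score in ranking:
    print(f"{name}: |r| = {score:.2f}")
```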

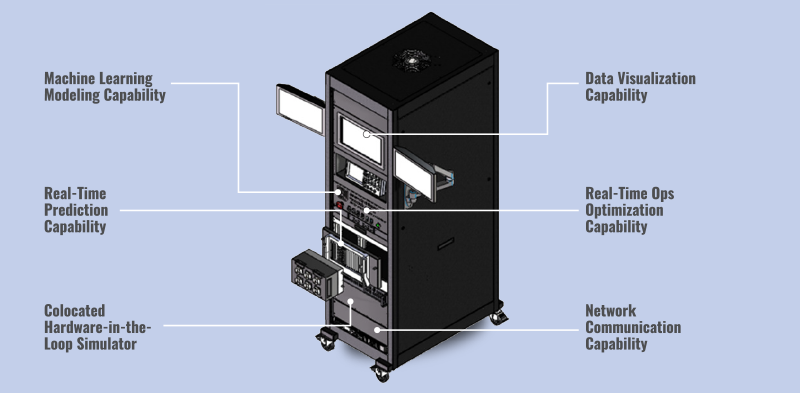

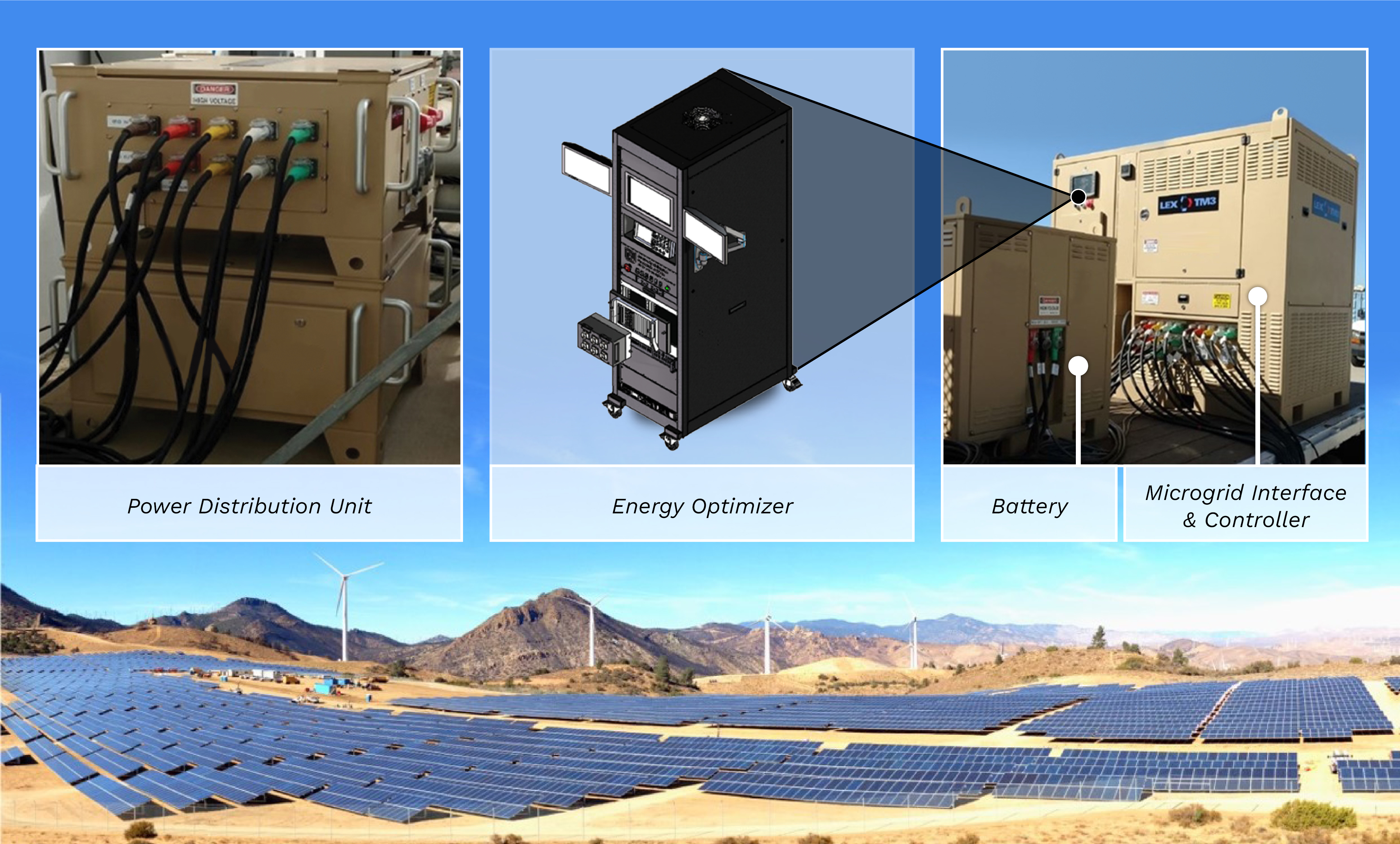

To address these challenges, the UDRI team recently proposed a unique solution that combines data-driven Bayesian neural networks with a physics-guided learning framework in which learnable parameters are assigned probabilistic weights [8–10]. This novel learning capability has been developed under a contract with NAVFAC EXWC to enhance the predictive analytics and control system for microgrid operation (as shown in Figure 1). Specifically, the system under development aims to optimize, at the system level and in a resilient manner, the operation of generators of different types, energy storage, and controllable loads by leveraging the latest advances in ML, data analytics, predictive control, and real-time computing. This solution may help improve energy forecasting accuracy, reduce energy costs across the Navy shore establishment, and reduce both redundant equipment and new DON equipment orders.

Figure 1. Capabilities of an ML-Driven Energy Optimizer Under Development (Source: Z. Jiang, S. C. Miller, and D. Dunn, Adapted From a Concept Design Art Image Codesigned by Advint LLC).

Features of ML Analytics

In the general field of AI, current ML practice searches for nonlinear functions that map input variables to output variables by fitting training samples, iteratively updating the weights and biases with gradients calculated from the errors between predicted and labeled values [11–18]. This process seeks unknown or hidden correlations and patterns in the training data implicitly. As a result, the current ML paradigm has many limitations [14–18]. For instance, the iterative updating of weights and biases is fragile, depends on gradients, and may not accurately reflect general correlations [14]. The classical training process is time-consuming and can result in overfitting or poor performance [15]. Typical point predictions based on fixed values of the learned weights may not sufficiently capture variations or uncertainty in the model parameters [16]. Slight variations in the input may also lead to large deviations in the predictions or to wrong outputs [17]. Probabilistic solutions exist, but they typically assume Gaussian distributions [18].
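The conventional training loop described above can be reduced to a few lines. The sketch below fits a one-input linear model by gradient descent on mean squared error (a deliberately tiny stand-in for a neural network, trained on made-up data) to show the end product: a single fixed pair of parameters, so every prediction is a point value with no uncertainty attached.

```python
def fit_gradient_descent(xs, ys, lr=0.01, epochs=5000):
    """Fit y = w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]  # predicted minus labeled values
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        w -= lr * grad_w  # step against the gradient
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # hypothetical labels generated by y = 2x + 1
w, b = fit_gradient_descent(xs, ys)
prediction = w * 5.0 + b  # one number; nothing indicates how confident the model is
print(f"w={w:.4f}, b={b:.4f}, prediction at x=5: {prediction:.4f}")
```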

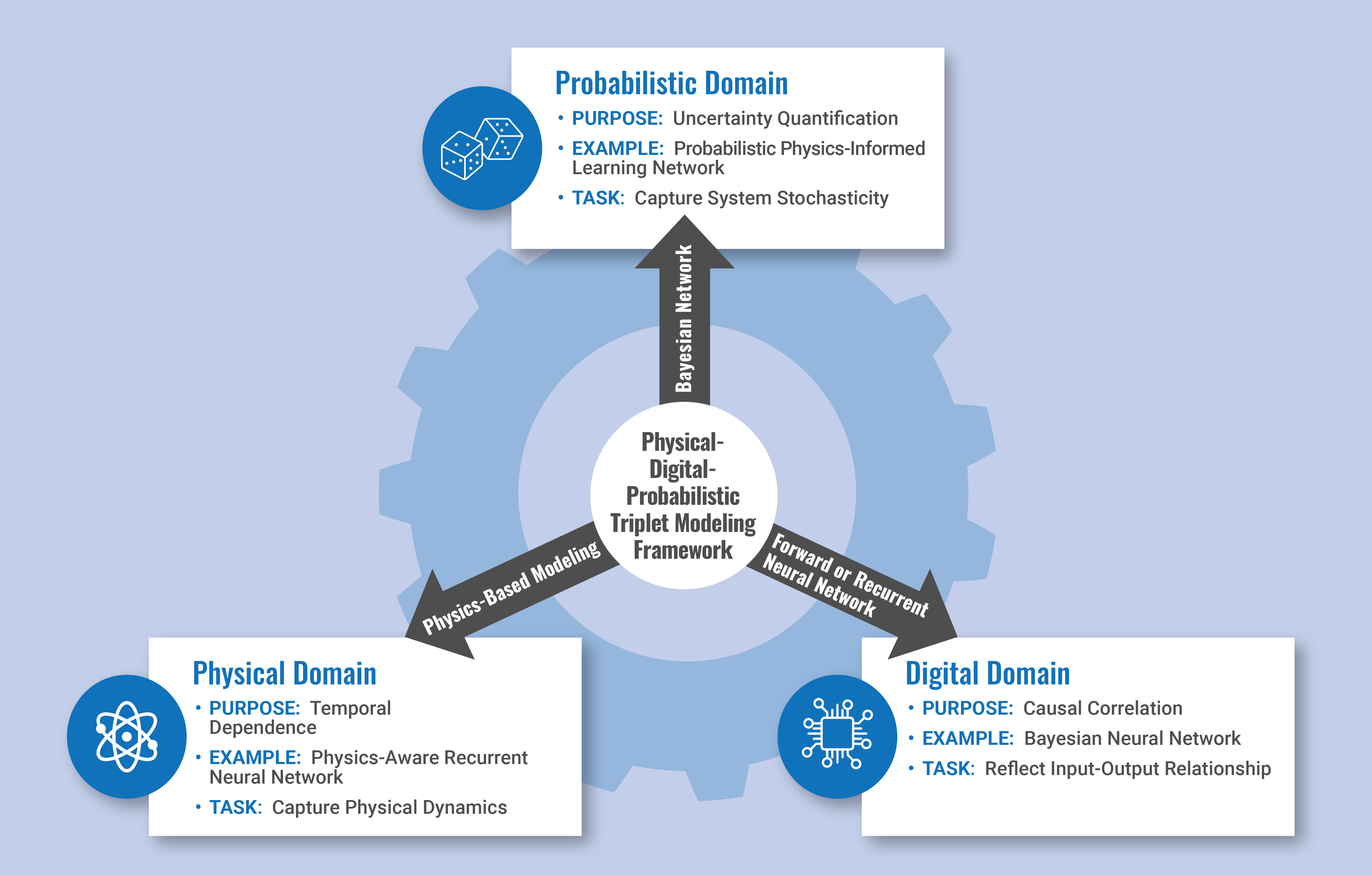

The unique solution recently developed at UDRI is a unified, data-driven method for predictive modeling and control of energy systems. It is a physics-guided, Bayesian neural-learning framework with probabilistic weights for the learnable parameters in the networks. This approach can account for our prior physical knowledge, operational data, and model uncertainty together to gain insight into the behavior of energy systems. Specifically, this learning framework can (1) model the causal relationships between cause and effect factors (i.e., input and output variables) in energy systems, (2) learn the system dynamics or temporal dependence from operational data, and/or (3) characterize the uncertainty or variability in the dynamic trends or parameters, as illustrated in Figure 2. To facilitate digital transformation, our three-pronged approach forms a physical-digital-probabilistic triplet modeling framework for industrial processes and dynamic systems. This learning capability can thus be leveraged to enhance predictive analytics and optimal control strategies in planning, operating, and maintaining autonomous energy systems. Application scenarios may include (1) predicting renewable power generation, load profiles, and cost/price trends; (2) learning and calibrating dynamic models of the energy system; (3) quantifying uncertainty in stochastic energy production/consumption for asset planning or scheduling; and (4) optimizing autonomous energy system operations in real time for improved energy efficiency, quality, and resiliency.

Figure 2. Three-Pronged Approach to the Physical-Digital-Probabilistic Triplet Modeling Framework for Industrial Processes and Dynamic Systems (Source: Z. Jiang, S. C. Miller, and D. Dunn).
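The UDRI framework is not described here in enough detail to reproduce. As a much simpler illustration of the same idea (physical knowledge encoded as a prior, operational data updating it, and uncertainty carried into the prediction), the sketch below performs a conjugate Gaussian Bayesian update of a single, hypothetical generator-efficiency parameter. The prior values and data are invented, and the Gaussian assumption is exactly the kind of simplification noted above for existing probabilistic methods, so this is a conceptual sketch only.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class Posterior:
    mean: float       # posterior mean of theta
    precision: float  # 1 / posterior variance

    @property
    def std(self) -> float:
        return 1.0 / sqrt(self.precision)


def bayes_update(prior_mean, prior_std, xs, ys, noise_std):
    """Conjugate Gaussian update for the model y = theta * x + Gaussian noise."""
    prior_prec = 1.0 / prior_std ** 2
    data_prec = sum(x * x for x in xs) / noise_std ** 2
    precision = prior_prec + data_prec
    weighted = prior_mean * prior_prec + sum(x * y for x, y in zip(xs, ys)) / noise_std ** 2
    return Posterior(weighted / precision, precision)


def predict(post, x, noise_std):
    """Predictive mean and std at input x (parameter uncertainty plus measurement noise)."""
    mean = post.mean * x
    std = sqrt(x * x / post.precision + noise_std ** 2)
    return mean, std


# Hypothetical: physics suggests an efficiency near 0.35 (prior std 0.05); logged data
# (fuel power in, electrical kW out) indicate the unit is closer to 0.32.
post = bayes_update(0.35, 0.05, [100.0, 200.0, 300.0], [32.0, 64.0, 96.0], noise_std=2.0)
mean_kw, std_kw = predict(post, 400.0, noise_std=2.0)
print(f"theta = {post.mean:.4f} +/- {post.std:.4f}; "
      f"output at 400 kW input: {mean_kw:.1f} +/- {std_kw:.1f} kW")
```

The posterior sits between the physics-based prior and the data, is much narrower than the prior, and the prediction comes with a spread rather than a single value.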

The NAVFAC EXWC-UDRI partnership effort includes designing, developing, testing, and evaluating a suite of data-driven, ML-based models for energy prediction and an open-architecture, ML-enabled predictive control system. The outcomes of this work can be used to predict and optimize energy production/consumption in microgrids or facility energy systems in real time. Such energy systems typically contain distributed or renewable generation (e.g., solar or wind power), energy storage (e.g., batteries), and controllable loads. Key capabilities developed in this effort include the following, as also illustrated in Figure 1:

- Data-driven, ML-based modeling ability for energy systems with varying temporal, probabilistic, and categorical characteristics.

- Real-time energy prediction capability considering time-domain dynamics, uncertainty quantification, and causal relationships.

- Real-time operation optimization functionality.

- Real-time hardware-in-the-loop (HIL) simulation with hybrid physics/learning-based models.

The capabilities developed in this effort can be leveraged to apply digital engineering and model-based systems engineering approaches to other technology projects that involve complex systems of systems [19]. For instance, these technologies can be transitioned to the U.S. Navy’s installation energy infrastructure (such as naval base facilities and microgrids), shipboard power systems, naval aviation operational energy systems, naval logistics, and/or naval enterprise systems. Further, as options for future development and applications, the ML methods developed here can be used in multiple stages of modeling and real-time HIL simulation to achieve the following:

- Learn and validate a compact representation (e.g., a recurrent neural network-based model) of complex components or systems from offline operational data.

- Update and calibrate the model with online operational data (i.e., online learning capability).

- Learn the uncertainty in the model parameters/dynamics and consider the probabilistic variations and contingencies in the prediction.

- Accelerate real-time simulation and HIL testing by using compact learning-based models (rather than complicated, compute-intensive, physics-based models) for some components.
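For the online calibration item above, one standard technique is recursive least squares (RLS) with a forgetting factor, which updates a parameter estimate one sample at a time and gradually discounts old data. This is our choice for illustration; the article does not say which online-learning method UDRI uses. The sketch tracks a single hypothetical efficiency coefficient that drifts from 0.35 to 0.30.

```python
class ScalarRLS:
    """Recursive least squares for y = theta * x with exponential forgetting."""

    def __init__(self, theta0: float = 0.0, p0: float = 1.0, forgetting: float = 0.9):
        self.theta = theta0    # current parameter estimate
        self.p = p0            # estimate covariance (uncertainty in theta)
        self.lam = forgetting  # 0 < lam <= 1; smaller values forget old data faster

    def update(self, x: float, y: float) -> float:
        gain = self.p * x / (self.lam + x * self.p * x)
        self.theta += gain * (y - self.theta * x)         # correct by the prediction error
        self.p = (self.p - gain * x * self.p) / self.lam  # shrink, then re-inflate by forgetting
        return self.theta


rls = ScalarRLS()
inputs = [100.0, 200.0, 300.0]
for k in range(50):  # hypothetical noise-free data with efficiency 0.35
    x = inputs[k % 3]
    rls.update(x, 0.35 * x)
before_drift = rls.theta
for k in range(50):  # equipment degrades: efficiency drops to 0.30
    x = inputs[k % 3]
    rls.update(x, 0.30 * x)
print(f"estimate before drift: {before_drift:.4f}, after drift: {rls.theta:.4f}")
```

A forgetting factor closer to 1 gives smoother but slower tracking; with real, noisy data, that trade-off would need tuning.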

ML-Enabled Energy Optimizer

The effort performed by UDRI under a NAVFAC research, development, test, and evaluation contract laid the foundation for the system design, hardware prototype, software architecture, algorithms for model learning and predictive optimization, and HIL simulation models, as highlighted in Figure 1. What makes the energy optimizer innovative is its ability to learn from operational data and its look-ahead prediction mechanisms, which are designed to identify opportunistic optimization options that reduce costs. The solution also improves data security and privacy by aggregating and embedding actionable intelligence from operational data into the learned models and communicating only the model structures and parameters over the network to a microgrid controller.

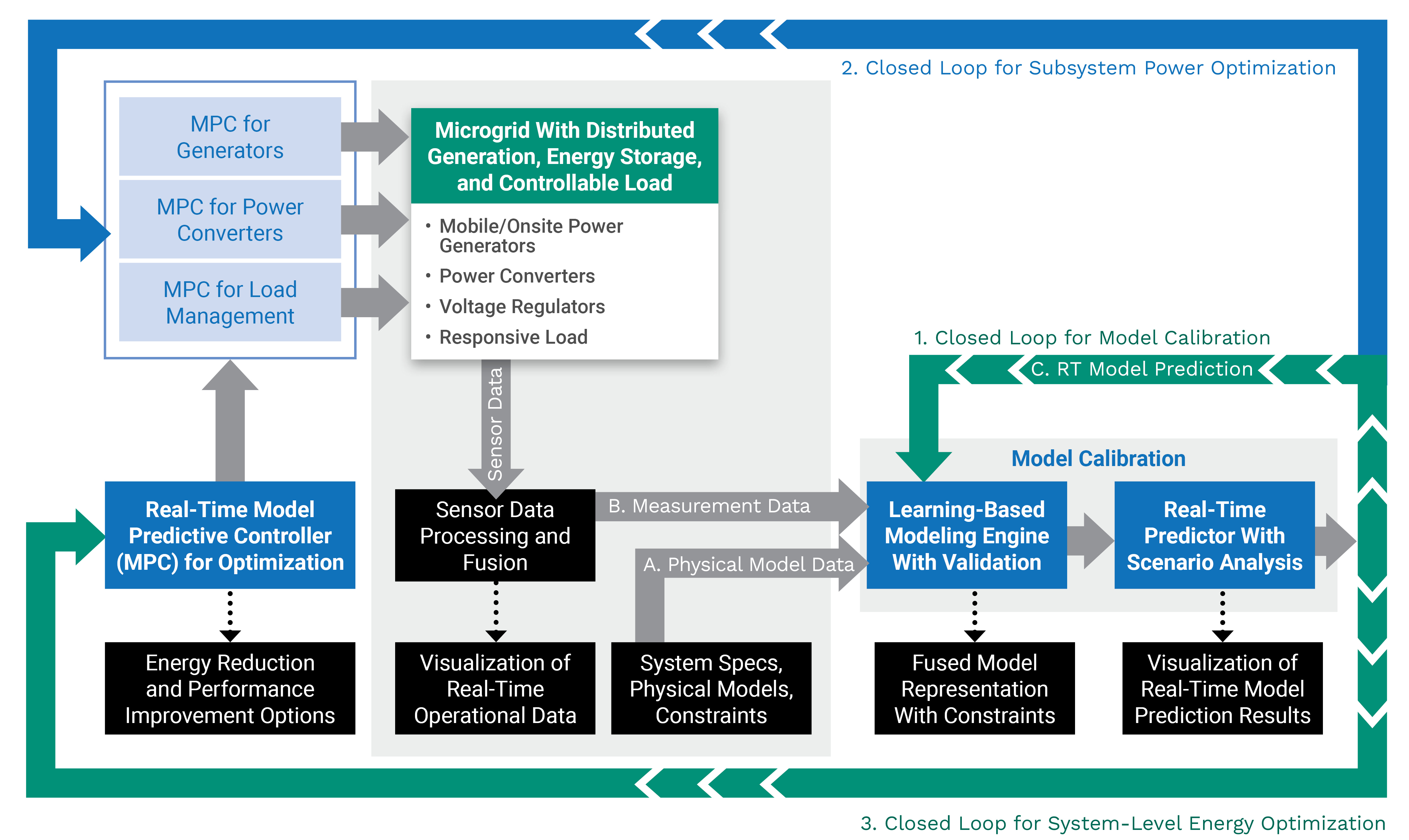

The control system, based on ML and model predictive control (MPC), can dynamically integrate appropriate asset models (including generators and loads) into a real-time energy optimizer and generate optimized control actions for each individual asset, as shown in Figure 3. The system consists of three closed loops: model calibration, equipment-level power optimization, and system-level energy optimization. The controller is scalable and adaptive, builds on open-architecture communication, and can be implemented on real-time processors and field-programmable gate arrays [20]. The proposed solution considers different asset configurations and types and uses real-time operational data to learn and model various energy phenomena. More importantly, these models will be used in a real-time, learning-enabled energy optimizer (through MPC) and incorporated into online operation to optimize configurations and operational settings. To achieve this, the control system connects multiple novel components in closed loops: (1) a learning-based modeling engine coupled with (2) a real-time predictor with scenario analysis for model calibration and (3) a real-time, two-layer MPC for optimization. The system takes model data (e.g., model structure/parameters) as input and generates optimal control actions or setpoints for multiple generators or loads as output.

Figure 3. Block Diagram of the Learning-Driven MPC System (Source: Z. Jiang, S. C. Miller, and D. Dunn).
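The receding-horizon principle behind MPC can be sketched in a single layer: at each step, optimize a schedule over a short look-ahead window, apply only the first decision, then re-plan with updated data. The toy below is not the two-layer controller described here. It uses dynamic programming over a discretized, lossless battery and assumes hourly steps, hypothetical time-of-use prices, perfect forecasts, and no export revenue; all function names are illustrative.

```python
from functools import lru_cache


def plan_first_move(soc, prices, loads, solar, capacity=40, step=10):
    """Minimize grid-energy cost over the window by dynamic programming.

    soc, capacity, and step are in kWh; each period is one hour, so a move of
    +step charges the battery at step kW for that hour. Returns the first move.
    """
    horizon = len(prices)
    moves = (-step, 0, step)  # discharge, idle, charge

    @lru_cache(maxsize=None)
    def best(t, s):
        if t == horizon:
            return 0.0, 0
        options = []
        for m in moves:
            if not 0 <= s + m <= capacity:
                continue  # state-of-charge limits
            grid_kwh = max(loads[t] - solar[t] + m, 0.0)  # imports only; surplus is curtailed
            future_cost, _ = best(t + 1, s + m)
            options.append((prices[t] * grid_kwh + future_cost, m))
        return min(options)

    return best(0, soc)[1]


def run_mpc(soc, prices, loads, solar, window=4):
    """Receding horizon: re-plan every hour and apply only the first move."""
    applied = []
    for t in range(len(prices)):
        move = plan_first_move(soc, prices[t:t + window], loads[t:t + window], solar[t:t + window])
        soc += move
        applied.append(move)
    return applied, soc


prices = [0.10, 0.10, 0.30, 0.30]  # hypothetical $/kWh: off-peak, then on-peak
loads = [20, 20, 20, 20]           # forecast load, kW
solar = [0, 0, 0, 0]               # night-time example
moves, final_soc = run_mpc(0, prices, loads, solar)
print(moves, final_soc)
```

The controller charges during the cheap hours and discharges during the expensive ones. In a fuller implementation, learned asset models would replace the fixed load and cost assumptions in the inner optimization.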

The control system has a hierarchical MPC structure (the outer two loops), with a higher-level MPC generating commands for multiple assets and a lower-level MPC managing each individual resource. This multilayer framework decomposes control of the entire power system into layers, or control territories, that can be managed and coordinated easily and efficiently. In this framework, the higher-level MPC minimizes energy losses and costs subject to dynamic load profiles while maintaining a dynamic level of reserve energy in storage to meet future power changes. The lower-level MPC optimizes power management, i.e., regulating the currents while controlling the bus voltage. As a result, the generators need not be sized to meet peak power demand, only average demand.
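The last point can be checked with simple energy bookkeeping. Assuming a lossless battery and a known load profile that balances over the window (both simplifications, with made-up numbers), a generator held at the average load lets storage absorb surpluses and cover deficits, and the swing in stored energy gives the minimum usable battery capacity.

```python
def size_storage_for_average_dispatch(load_kw: list[float], dt_h: float = 1.0):
    """Return (generator setpoint kW, stored-energy trajectory kWh, required usable kWh).

    The generator runs at the mean load and storage covers the difference. Stored
    energy is tracked relative to the starting state of charge; losses are ignored.
    """
    setpoint_kw = sum(load_kw) / len(load_kw)
    stored_kwh = 0.0
    trajectory = []
    for p in load_kw:
        stored_kwh += (setpoint_kw - p) * dt_h  # surplus charges, deficit discharges
        trajectory.append(stored_kwh)
    # Usable capacity must span the lowest and highest points, including the start.
    swing_kwh = max(0.0, *trajectory) - min(0.0, *trajectory)
    return setpoint_kw, trajectory, swing_kwh


loads = [40.0, 40.0, 100.0, 60.0]  # hypothetical hourly load profile, kW
setpoint, trajectory, usable_kwh = size_storage_for_average_dispatch(loads)
print(f"generator at {setpoint:.0f} kW instead of the {max(loads):.0f} kW peak; "
      f"storage needs at least {usable_kwh:.0f} kWh usable capacity")
```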

For example, an initial configuration of a small-scale microgrid might consist of a fuel-based distributed generation system (e.g., a diesel engine- or gas turbine-driven generator), a battery energy storage system, renewable power sources (e.g., a solar generation farm), and controllable load banks. These assets can connect with the utility grid and operate subject to time-of-use pricing signals. The solar generation may operate in maximum power point tracking mode, in which the output power varies with solar irradiance. The generation cost (or energy conversion efficiency) of the fuel-fired generator may change with its output power. The energy storage system can be charged and discharged within its constraints, and its power losses may vary with the charge or discharge rate. The energy losses in both power generation and storage can be learned from historical and real-time operational data through ML approaches. The load can be categorized as critical or noncritical, and noncritical load can be temporarily reduced or turned off.
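As one hedged example of learning how generation cost varies with output, the sketch below fits a straight line of fuel rate against electrical output (the Willans-line approximation often used for engines, chosen here for illustration) to synthetic operating data by ordinary least squares, then derives efficiency at different loads. The data points and the diesel energy content of about 10 kWh per liter are assumptions.

```python
DIESEL_KWH_PER_L = 10.0  # assumed approximate energy content of diesel fuel


def fit_fuel_line(power_kw, fuel_l_per_h):
    """Least-squares fit of fuel_rate = a + b * power; returns (a, b)."""
    n = len(power_kw)
    mp = sum(power_kw) / n
    mf = sum(fuel_l_per_h) / n
    sxy = sum((p - mp) * (f - mf) for p, f in zip(power_kw, fuel_l_per_h))
    sxx = sum((p - mp) ** 2 for p in power_kw)
    b = sxy / sxx
    return mf - b * mp, b


def efficiency(a, b, power_kw):
    """Electrical output divided by fuel energy input at the given load."""
    return power_kw / ((a + b * power_kw) * DIESEL_KWH_PER_L)


# Synthetic operating log: electrical output (kW) and measured fuel rate (L/h).
a, b = fit_fuel_line([50.0, 100.0, 150.0, 200.0], [17.0, 29.0, 41.0, 53.0])
for load in (50.0, 100.0, 200.0):
    print(f"{load:.0f} kW: efficiency {efficiency(a, b, load):.1%}")
```

The fitted no-load term `a` is what makes part-load operation less efficient, which is why an optimizer benefits from knowing each generator's learned curve.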

Applications of ML Methods

ML methods can be widely used in a model-based systems engineering approach. These enabling techniques can drive the ongoing digital engineering transformation [19], which requires the digital interconnection of the tools, models, and data necessary for mission success. Several examples are outlined next.

Utility Planning – Predictive Analytics

Renewable Energy Production Prediction

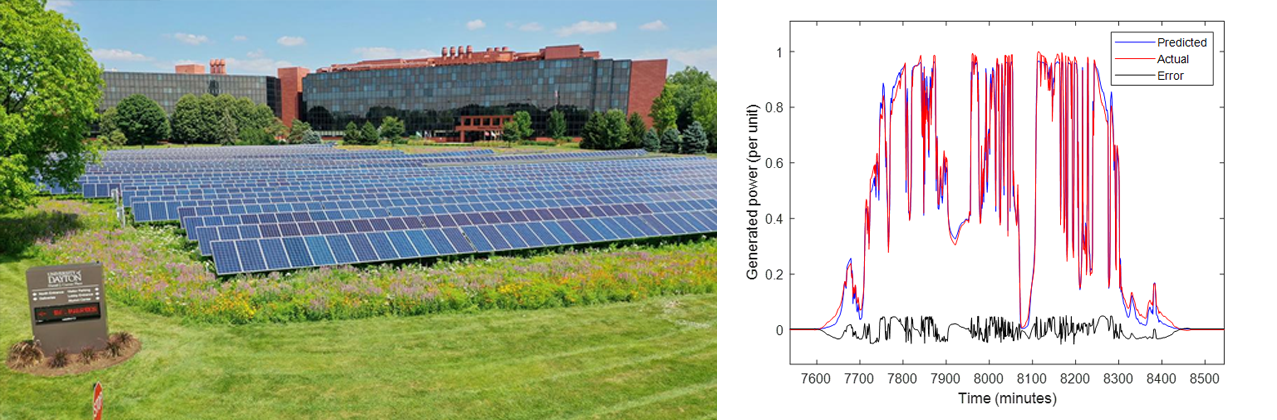

Although renewable energy resources such as solar and wind power are intermittent, the power produced by these assets correlates with the physical dynamics of the solar or wind resource. By leveraging historical weather or measurement data, it is possible to gain more insight into the varying trends in energy production [10]. Sample results are shown in Figure 4. Such predictions can be helpful in the utility’s planning and scheduling tasks.

Figure 4. (Left) Solar Power Installation on UDRI Campus (Source: University of Dayton) and (Right) Results From the ML-Based Model for Solar Power Production Prediction [10].
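A minimal physics-guided regression illustrates the idea; it is not the ML model reported in [10]. Photovoltaic output is roughly proportional to irradiance and falls as cell temperature rises. Here the temperature coefficient is fixed at an assumed -0.4 % per degree C, and only a single scale factor is learned from historical data by least squares through the origin. All numbers are hypothetical.

```python
GAMMA_PER_C = -0.004  # assumed module temperature coefficient (-0.4 % per degree C)


def physics_feature(irradiance_w_m2, cell_temp_c):
    """Irradiance corrected for cell temperature relative to 25 degrees C."""
    return irradiance_w_m2 * (1.0 + GAMMA_PER_C * (cell_temp_c - 25.0))


def fit_scale(irradiance, temps, power_kw):
    """Least squares through the origin: power_kw ~ k * physics_feature."""
    z = [physics_feature(g, t) for g, t in zip(irradiance, temps)]
    return sum(zi * p for zi, p in zip(z, power_kw)) / sum(zi * zi for zi in z)


# Hypothetical history for a small array: irradiance (W/m^2), cell temp (C), AC power (kW).
k = fit_scale([200.0, 500.0, 800.0, 1000.0], [20.0, 30.0, 40.0, 50.0], [10.2, 24.5, 37.6, 45.0])
# Next-hour forecast from (hypothetical) weather-forecast inputs.
forecast_kw = k * physics_feature(900.0, 35.0)
print(f"k = {k:.4f} kW per W/m^2, forecast {forecast_kw:.1f} kW")
```

Encoding the known physics in the regressor leaves only one parameter to learn, so a small amount of data suffices; the forecast is only as good as the weather inputs it is given.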

Load Profile Forecasting

Public utilities typically give the highest priority to load centers such as military bases. Load profile prediction is important for energy resource scheduling and planning, and load profiles can be predicted from historical data and operational conditions [21]. One application of load profile prediction is smart load shedding: during grid outages or microgrid islanding events, ML methods could be used to predict future circuit loads, and the predictions could then be used to shed loads from a prioritized list of circuits. A recent NAVFAC EXWC project involves testing and evaluating smart load shedding.
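Smart load shedding can be sketched as two steps: forecast each circuit's load, then shed the least important noncritical circuits until the forecast fits the available capacity. The article does not describe the NAVFAC project's algorithm. In the hypothetical sketch below, the forecaster is a simple same-hour average of previous days standing in for an ML model, and the circuits, priorities, and capacities are invented.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Circuit:
    name: str
    priority: int            # 1 = most important
    critical: bool           # critical circuits are never shed
    history_kw: List[float]  # load at this hour on previous days

    def forecast_kw(self) -> float:
        # Baseline forecast: average of the same hour on previous days.
        return sum(self.history_kw) / len(self.history_kw)


def plan_shedding(circuits: List[Circuit], capacity_kw: float):
    """Return (names of circuits to shed, forecast load still served)."""
    served_kw = sum(c.forecast_kw() for c in circuits)
    shed = []
    for c in sorted(circuits, key=lambda c: c.priority, reverse=True):
        if served_kw <= capacity_kw:
            break
        if not c.critical:  # least important noncritical circuits go first
            shed.append(c.name)
            served_kw -= c.forecast_kw()
    if served_kw > capacity_kw:
        raise RuntimeError("critical load alone exceeds available capacity")
    return shed, served_kw


circuits = [
    Circuit("hospital", 1, True, [50.0, 52.0, 48.0]),
    Circuit("ops_center", 2, True, [30.0, 30.0, 30.0]),
    Circuit("barracks_hvac", 3, False, [25.0, 35.0, 30.0]),
    Circuit("gym", 4, False, [10.0, 14.0, 12.0]),
]
print(plan_shedding(circuits, capacity_kw=100.0))
```

A better forecaster changes only `forecast_kw`; the prioritized shedding logic stays the same, and priorities could themselves be made dynamic as the text suggests.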

Energy Efficiency Prediction

ML can play an effective role in learning and predicting the fuel consumption of distributed generators or the energy efficiency of data centers [22]. It is also important to apply ML-driven control to improve energy efficiency in electronics; computing; data centers; lighting; heating, ventilation, and air conditioning; etc.

Facility Management

Energy Management for Buildings or Vehicles

The functions of renewable energy production prediction, load profile forecasting, and energy efficiency prediction also apply to energy management for naval facilities and buildings and for vehicles such as hybrid trucks [23, 24]. These capabilities could be leveraged to produce a reusable, adaptive, real-time energy system optimizer for Navy use that addresses increasing power/energy needs and enables energy savings without sacrificing performance or mission capabilities.

Digital Twin for Test Facilities

The developed capabilities, including digital engineering tools and model-based systems engineering approaches, can be extended to develop digital twins for naval test facilities [25, 26]. A current EXWC Digital Twin project uses grid-forming inverters with AI computing technology to establish a well-defined and accurate digital twin of an energy system for managing energy resources.

Predictive Maintenance

Similar techniques can be developed and leveraged for predictive maintenance, taking into account the operational conditions and life-cycle use of equipment and resources [27, 28]. The proposed control platform, which is modular and reconfigurable, is expected to reduce installation and maintenance costs substantially and to provide expeditionary power when speed, range, agility, and flexibility are critical to mission success.
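A simple baseline for the prognostic side of predictive maintenance is to fit a trend to a condition indicator and extrapolate it to an alarm threshold. The sketch below uses hypothetical vibration readings and a hypothetical threshold, assumes linear degradation, and estimates remaining useful life in operating hours. Practical systems would use richer models and report uncertainty on the estimate.

```python
def fit_line(xs, ys):
    """Ordinary least-squares line; returns (intercept, slope)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def remaining_useful_life(hours, indicator, threshold):
    """Hours from the last reading until the fitted trend reaches the threshold."""
    intercept, slope = fit_line(hours, indicator)
    if slope <= 0:
        return float("inf")  # no upward degradation trend detected
    hours_at_threshold = (threshold - intercept) / slope
    return max(hours_at_threshold - hours[-1], 0.0)


# Hypothetical pump vibration (mm/s RMS) logged every 100 operating hours.
rul = remaining_useful_life([0.0, 100.0, 200.0, 300.0], [2.0, 2.5, 3.0, 3.5], threshold=7.0)
print(f"estimated remaining useful life: {rul:.0f} h")
```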

Microgrid Applications

Military Microgrids

Although a simple microgrid power system was modeled and tested in an HIL environment during a recent NAVFAC effort, the general approach applies to a wide range of military microgrid systems, such as base-wide microgrids [29, 30], expeditionary microgrids, or mobile microgrids [5], as shown in Figure 5.

Figure 5. A Typical Application Scenario of an ML-Driven Energy Optimizer on a Military Base (Source: NAVFAC EXWC).

Microgrid Test Bed

The techniques developed for microgrids can be easily transitioned to a microgrid test bed to facilitate testing activities, particularly with digital engineering tools and a model-based systems engineering approach [31].

Shipboard Power Systems

The developed technologies can be widely applied to shipboard power systems, especially those aboard electrified warships, because of their versatile energy flows and flexible control opportunities. These systems may involve complex energy components, such as prime movers, generators, energy storage, distribution circuits, and sophisticated loads like high-power radar or directed energy, all subject to operational constraints. Optimized operation of propulsion/power systems can reduce system weight/size and lower the fuel consumption and operating costs of the military systems that use this power [32–34]. AI-driven digital engineering methods and tools can help reduce the development, acquisition, sustainment, or total ownership costs of fielded systems.

Naval Aviation Operational Energy

The Naval Aviation Operational Energy system, where safety, weight, size, maneuverability, and agility are high-priority features, can also benefit from the learning-enabled, model-predictive control scheme. Desired advantages may include real-time predictive optimization, proactive actions ahead of operational changes, and satisfaction of economic, operational, or safety constraints. Total operating costs can be considerably reduced by optimally managing dynamic energy reserves, decreasing energy losses, optimizing mission profiles, and automating operations [35, 36].

Anticipated Benefits/Recommendations for Future Development

Previous efforts have laid out a systematic framework for energy modeling and developed a suite of ML-driven models and methods for energy prediction and control. The developed solution can significantly improve military capabilities through enhanced power and energy performance enabled by AI technologies, even when using only existing commercial off-the-shelf power and energy sources. This solution could have a significant impact on U.S. Department of Defense (DoD) capabilities because it (1) captures energy system dynamics, degradation, and uncertainty in the model in a data-driven manner, information that would otherwise be difficult to capture or unavailable in energy system models; (2) provides mechanisms for continuous online model learning/validation; (3) enables fast (real-time) HIL simulation to gain insights into system behaviors, greatly reducing the design and development time/cost of military energy systems; and (4) enables an integrated control platform to proactively manage energy flows among the propulsion, power, and thermal subsystems to achieve higher efficiency, better performance, and improved autonomy.

The anticipated advantages of these methods must be validated in realistic application systems and may include energy savings, cost savings, and power quality and resilience improvements [37, 38]. Future efforts are expected to demonstrate prototypes of an AI-driven predictive energy optimizer in improving energy resiliency on military installations and validate their advantages. Specifically, the anticipated objectives of future demonstration and validation efforts may include the following:

- Validate the effectiveness and accuracy of ML algorithms and models for forecasting generator fuel efficiency and load profiles based on operational data.

- Conduct power HIL testing of a prototype AI-driven, predictive optimizer to evaluate the effectiveness of learning-based prediction and model-based predictive optimization functionalities in a realistic microgrid.

- Perform field demonstration at a DoD installation site and validate the performance of the ML-driven predictive optimizer prototype so the technology can be transitioned to the field faster.

As a recommendation for future development, ML can also be used in the test and evaluation stage to (1) screen and downselect test scenarios faster, (2) automatically analyze test data to determine correlations among system parameters or conditions, and (3) generate the most promising candidate design options. In addition, ML can be leveraged for diagnosis/prognosis and preventive maintenance. All these functions are closely related to digital engineering practice, with tangible benefits for the design, development, and testing of complex engineering systems.

Conclusions

The benefits and advantages of AI and ML can be expanded across the DoD’s power and energy ecosystems. This article has briefly discussed several emerging applications of AI and ML technologies in naval energy autonomy and digital transformation. As these applications are widely transitioned and deployed, their potential impact on operational autonomy will be more clearly understood and realized. While digital engineering tools such as AI and ML techniques improve the effectiveness and resiliency of autonomous systems and workflow efficiency, their impact will be amplified when combined with other emerging digital technologies. These may include sensor fusion through universal learning, predictive analytics using deep learning and data science methods, computational cognitive science, optimization techniques, quantum computing, and other technologies that can enable a deeper understanding of complex, integrated engineering system operations.

Acknowledgment

Funding provided by the NAVFAC EXWC is acknowledged.

References

1. U.S. DON. “Department of the Navy Strategy for Renewable Energy.” https://www.secnav.navy.mil/eie/Documents/DoNStrategyforRenewableEnergy.pdf, accessed on 15 May 2023.

2. NAVFAC P-602. “3 Pillars of Energy Security (Reliability, Resiliency, & Efficiency).” Washington, DC, June 2017.

3. U.S. DON. “Department of the Navy Climate Action 2030.” https://www.navy.mil/Portals/1/Documents/Department%20of%20the%20Navy%20Climate%20Action%202030%20220531.pdf, accessed on 5 June 2023.

4. Stamp, J. “The SPIDERS project – Smart Power Infrastructure Demonstration for Energy Reliability and Security at US Military Facilities.” 2012 IEEE PES Innovative Smart Grid Technologies, Washington, DC, 2012.

5. U.S. DoD. “DOD Demonstrates Mobile Microgrid Technology.” https://www.defense.gov/News/News-Stories/Article/Article/2677877/dod-demonstrates-mobile-microgrid-technology/, accessed on 5 June 2023.

6. Shumway, R. H., and D. S. Stoffer. Time Series Analysis and Its Applications. Third edition, Springer: USA, 2011.

7. Karpatne, A., G. Atluri, J. Faghmous, M. Steinbach, A. Banerjee, A. Ganguly, S. Shekhar, N. Samatova, and V. Kumar. “Theory-Guided Data Science: A New Paradigm for Scientific Discovery from Data.” In IEEE Transactions on Knowledge and Data Engineering, vol. 29, no. 10, pp. 2318–2331, 1 October 2017.

8. Jiang, Z., and J. Saurine. “Data-Driven, Physics-Guided Learning of Dynamic System Models.” AIAA Science & Technology Forum, January 2023.

9. Jiang, Z., and K. Beigh. “Bayesian Learning of Dynamic Physical System Uncertainty.” AIAA Aviation Symposium, June 2022.

10. Jiang, Z., and K. Beigh. “Data-Driven Modeling of Dynamic Systems Based on Online Learning.” In AIAA Propulsion and Energy 2021 Forum, p. 3284, 2021.

11. LeCun, Y., Y. Bengio, and G. Hinton. “Deep Learning.” Nature, vol. 521, pp. 436–444, https://doi.org/10.1038/nature14539, 2015.

12. Pan, J. “Machine Learning Proved Efficient.” Nature Computational Science, vol. 2, p. 619, https://doi.org/10.1038/s43588-022-00344-8, 2022.

13. Caro, M., H. Huang, M. Cerezo, et al. “Generalization in Quantum Machine Learning From Few Training Data.” Nature Communications, vol. 13, p. 4919, https://doi.org/10.1038/s41467-022-32550-3, 2022.

14. Rudin, C. “Stop Explaining Black Box Machine Learning Models for High Stakes Decisions and Use Interpretable Models Instead.” Nat. Mach. Intell., vol. 1, pp. 206–215, https://doi.org/10.1038/s42256-019-0048-x, 2019.

15. Takahashi, Y., M. Ueki, G. Tamiya, et al. “Machine Learning for Effectively Avoiding Overfitting Is a Crucial Strategy for the Genetic Prediction of Polygenic Psychiatric Phenotypes.” Transl. Psychiatry, vol. 10, p. 294, https://doi.org/10.1038/s41398-020-00957-5, 2020.

16. Ghahramani, Z. “Probabilistic Machine Learning and Artificial Intelligence.” Nature, vol. 521, no. 7553, pp. 452–459, doi: 10.1038/nature14541, 28 May 2015.

17. Schramowski, P., W. Stammer, S. Teso, et al. “Making Deep Neural Networks Right for the Right Scientific Reasons by Interacting With Their Explanations.” Nat. Mach. Intell., vol. 2, pp. 476–486, https://doi.org/10.1038/s42256-020-0212-3, 2020.

18. Sebastian, A., A. Pannone, S. Subbulakshmi Radhakrishnan, et al. “Gaussian Synapses for Probabilistic Neural Networks.” Nat. Commun., vol. 10, p. 4199, https://doi.org/10.1038/s41467-019-12035-6, 2019.

19. U.S. Navy and Marine Corps. “United States Navy and Marine Corps Digital Systems Engineering Transformation Strategy.” https://nps.edu/documents/112507827/0/2020+Dist+A+DON+Digital+Sys+Eng+Transformation+Strategy+2+Jun+2020.pdf/, accessed on 15 May 2023.

20. Jiang, Z., and A. Raziei. “Reconfigurable Hardware-Accelerated Model Predictive Controller.” U.S. Patent 10,915,075, filed on 2 October 2017.

21. Zhang, L., J. Wen, Y. Li, J. Chen, Y. Ye, Y. Fu, and W. Livingood. “A Review of Machine Learning in Building Load Prediction.” Applied Energy, vol. 285, 1 March 2021.

22. Haghshenas, K., A. Pahlevan, M. Zapater, S. Mohammadi, and D. Atienza. “MAGNETIC: Multi-Agent Machine Learning-Based Approach for Energy Efficient Dynamic Consolidation in Data Centers.” In IEEE Transactions on Services Computing, vol. 15, no. 1, pp. 30–44, doi: 10.1109/TSC.2019.2919555, May 2019.

23. Ngo, N. T., A. D. Pham, T. T. H. Truong, et al. “Developing a Hybrid Time-Series Artificial Intelligence Model to Forecast Energy Use in Buildings.” Sci. Rep., vol. 12, p. 15775, https://doi.org/10.1038/s41598-022-19935-6, 2022.

24. Lee, W., H. Jeoung, D. Park, T. Kim, H. Lee, and N. Kim. “A Real-Time Intelligent Energy Management Strategy for Hybrid Electric Vehicles Using Reinforcement Learning.” In IEEE Access, vol. 9, pp. 72759–72768, doi: 10.1109/ACCESS.2021.3079903, 2021.

25. Lv, Z., H. Lv, and M. Fridenfalk. “Digital Twins in the Marine Industry.” Electronics, vol. 12, no. 9, p. 2025, https://doi.org/10.3390/electronics12092025, 2023.

26. Sri Madusanka, N., Y. Fan, S. Yang, and X. Xiang. “Digital Twin in the Maritime Domain: A Review and Emerging Trends.” Journal of Marine Science and Engineering, vol. 11, no. 5, p. 1021, 2023.

27. RealClear Defense. “Navy to Deploy New Tech to Prevent Maintenance Problems.” https://www.nationaldefensemagazine.org/articles/2022/3/11/navy-to-deploy-new-tech-to-prevent-maintenance-problems, accessed on 5 June 2023.

28. Kimera, D., and F. Nduvu Nangolo. “Predictive Maintenance for Ballast Pumps on Ship Repair Yards via Machine Learning.” Transportation Engineering, vol. 2, p. 100020, 2020.

29. Association of Defense Communities. “NAVFAC EXWC Begins Testing Transportable Microgrid Project.” https://defensecommunities.org/2020/11/navfac-exwc-begins-testing-transportable-microgrid-project/, accessed on 5 June 2023.

30. Anderson, W., et al. “Growing Energy Resiliency Through Research.” Naval Science and Technology Future Force, vol. 9, no. 1, p. 6, https://www.nre.navy.mil/media/document/future-force-vol-9-no-1-2023, accessed on 5 June 2023.

31. U.S. DoD. “Department of Defense Annual Energy Management Report, Fiscal Year 2015.” https://www.acq.osd.mil/eie/downloads/ie/fy%202015%20aemr.pdf, accessed on 5 June 2023.

32. Jin, Z., L. Meng, J. M. Guerrero, and R. Han. “Hierarchical Control Design for a Shipboard Power System With DC Distribution and Energy Storage Aboard Future More-Electric Ships.” IEEE Transactions on Industrial Informatics, vol. 14, no. 2, pp. 709–720, 2018.

33. Haseltalab, A., and R. R. Negenborn. “Model Predictive Maneuvering Control and Energy Management for All-Electric Autonomous Ships.” Applied Energy, vol. 251, p. 113308, 2019.

34. Islam, M. M. “Ship Smart System Design (S3D) and Digital Twin.” In VFD Challenges for Shipboard Electrical Power System Design, IEEE, pp. 117–127, doi: 10.1002/9781119463474.ch9, 2019.

35. U.S. Naval Aviation Enterprise. “Navy Aviation Vision 2030-2035.” Available at defense.gov as NAVY AVIATION VISION 2030-2035_FNL.PDF, accessed on 5 June 2023.

36. Barnhill, D. “Analyzing U.S. Navy F/A-18 Fuel Consumption for Purposes of Energy Conservation.” M.S. thesis, Naval Postgraduate School, https://apps.dtic.mil/sti/trecms/pdf/AD1150419.pdf, accessed on 5 June 2023.

37. Li, P., D. Li, D. Vrabie, S. Bengea, and S. Mijanovic. “Experimental Demonstration of Model Predictive Control in a Medium-Sized Commercial Building.” The 3rd International High Performance Buildings Conference at Purdue, 14–17 July 2014, https://docs.lib.purdue.edu/cgi/viewcontent.cgi?article=1152&context=ihpbc, accessed on 5 June 2023.

38. Piette, M. A. “Hierarchical Occupancy Responsive Model Predictive Control at Room, Building, and Campus Levels.” DOE/EERE 2017 Building Technologies Office Peer Review, Lawrence Berkeley National Lab, https://www.energy.gov/sites/prod/files/2017/04/f34/3_94150b_Piette_031517-1200.pdf, accessed on 5 June 2023.

Biographies

Zhenhua Jiang is a principal research engineer at UDRI. His research interests include machine learning, autonomy, predictive analytics and control, microgrids, probabilistic modeling, and quantum computing. He has developed a data-driven physics-guided Bayesian neural learning method that is well suited to modeling, controlling, and optimizing various energy systems, autonomous air/space/ground/underwater vehicles, and quantum systems. He has over 100 publications and several patents on subjects such as distribution automation, model predictive control, probabilistic decision engines, and quantum probabilistic solvers. Dr. Jiang holds a Ph.D. in electrical engineering from the University of South Carolina.

Scott C. Miller is an electrical engineer and project manager in the Energy Management Division of the NAVFAC Engineering and Expeditionary Warfare Center. His research, development, test, and evaluation interests include artificial intelligence and machine learning, data analytics and analysis, microgrids, utility and energy modeling, and cybersecurity communications. Mr. Miller holds an M.S. in electrical engineering from California State University, Northridge, California, and is a licensed Professional Engineer (PE) in electrical engineering.

David Dunn is the Power and Energy Division head at the University of Dayton Research Institute, where he oversees hundreds of power generation, storage, and use research projects for customers across the DoD, Department of Energy, and the National Aeronautics and Space Administration as well as commercial partners looking to improve their product efficiencies and innovation. He is a retired U.S. Air Force (USAF) officer and USAF Test Pilot School graduate. Mr. Dunn holds an M.S. in electrical engineering from the Air Force Institute of Technology and a B.S. in electrical engineering from the U.S. Air Force Academy.