INTRODUCTION

In the war of words, artificial intelligence (AI) and machine learning (ML) currently rank near the top. This is due to the obvious potential impact these technologies have on almost every market sector. Based on a review of multiple predictive reports [1], with estimates of market growth ranging from $5 billion to $8 trillion by 2020, it is imperative to ask how these technologies are applied and how they can continue to be used to optimize our armament systems. The purpose of this article is to give a high-level review of how AI and ML can optimize weapon platforms. This includes a basic discussion of the benefits and concerns of making AI and ML part of our integrated weapon and fire control systems. How our Warfighter remains “in-the-loop” will always be a talking point, particularly as AI and ML move closer to reaching the singularity. For this reason, part of the discussion focuses on identifying levels of autonomy for armament systems to ensure control is never compromised. AI and ML are not new concepts for the Army to adopt for its armament systems, and multiple examples of fire control platforms that use AI and ML, either already fielded or in development, are included. Finally, the ethical and moral issues raised by using AI and ML in weapon platforms are addressed.

BENEFITS

The ability to process information quickly and effectively is one of the fundamental benefits of AI and ML, as has already been proven for computer systems in general. These advantages grow as the depth of learning and the complexity of the information increase. AI and ML have the potential to process information at a higher volume and speed than is possible for our Warfighters, and the ability to identify patterns advantageous to the Warfighter also lies within this processing. This has multiple benefits, not only in accuracy and speed but also in lightening the Warfighter’s cognitive load, freeing the Warfighter to focus on more complex strategy and the real-time decision making that is critical during an operation. If used properly, AI and ML can also help reduce errors that stem from the often stress-dependent human element.

SINGULARITY AND CONCERNS

The singularity is described as the point at which human intelligence and machine intelligence converge: the computer and its software will have reached the same level and pattern of recognition and awareness that our species enjoys. Arguments continue as to whether this will ever be possible. Nevertheless, based on a model of world gross domestic product (GDP) and processing power (i.e., the available budget and how much transistor-based computing power it can buy), Ray Kurzweil asserts that we will reach the singularity in the 2050–2060 timeframe [2].


Reaching it would, in effect, create a new species on the planet. Linked into armament systems, this opens the imagination to many possibilities that have played out in many sci-fi movies and have built a base of distrust for this type of development. On a positive note, humans have always won in these scenarios, but reality may not be so kind. One result of predictions such as Kurzweil’s was an open letter to the United Nations, signed by many of the greatest thinkers and leaders who want to ensure humanity’s growth continues, asking for a ban on developing “killer robots,” or Lethal Autonomous Weapon Systems (LAWS) [3]. This bears directly on the armaments community, which will need proper safeguards in place, controlling the level of autonomy as machines are weaponized, while still gaining the benefits of AI within armament solutions. A fundamental dilemma is the possibility that other “actors” will develop these capabilities and put us at a disadvantage. In other words, the rules of engagement will be forced to shift as these systems are deployed. Therefore, any leading military must develop platforms with these capabilities and have them ready for use if an adversary begins to deploy them, to ensure overmatch is maintained.

This does not fully alleviate the operational and ethical concerns. Further discussion is needed to fully understand the ethical ramifications of developing fully autonomous weapon platforms that integrate advanced AI and ML capabilities. Schroeder’s research on the ethics of war as it relates to autonomous weapon systems identifies multiple fundamental considerations that need to be addressed as these systems are developed [4]. One major concern is that designers will not be able to predict with complete certainty how these systems will operate, opening the possibility of misconduct on the battlefield by LAWS. This links directly to the 2001 Articles on Responsibility of States for Internationally Wrongful Acts, published by the International Law Commission, under which states operating LAWS-based systems would be responsible for the acts of these systems when employed with their forces [5]. On the other hand, some have argued that autonomous systems would not act out of fear or aggression and could provide video evidence to back up their actions [4, 6].

It is difficult to predict how the law and ethics for a LAWS-based system will be established and upheld, as these systems do not yet fully exist. Using weaponized platforms such as LAWS will alter the way battles are fought.

The use of these systems may become more complex given different cultures’ views on the importance or place of robotic platforms within society; some cultures value robotic systems more than others do. If the singularity is achieved at a human level, it can be argued that humans will have created a new species on the planet that deserves the same rights and protections as themselves and other species. As platforms get closer to the singularity [7], there is debate on human rights vs. robot rights. Sending a fully weaponized robot that is “aware,” or has reached the singularity, but has not chosen to fight on its own might be conceived as another form of forced labor or slavery. Fortunately, there will be some years before this point is reached, allowing in-depth discussion and debate. Understanding and classifying the levels of autonomy for weaponized platforms is part of this discussion.

LEVELS OF AUTONOMY

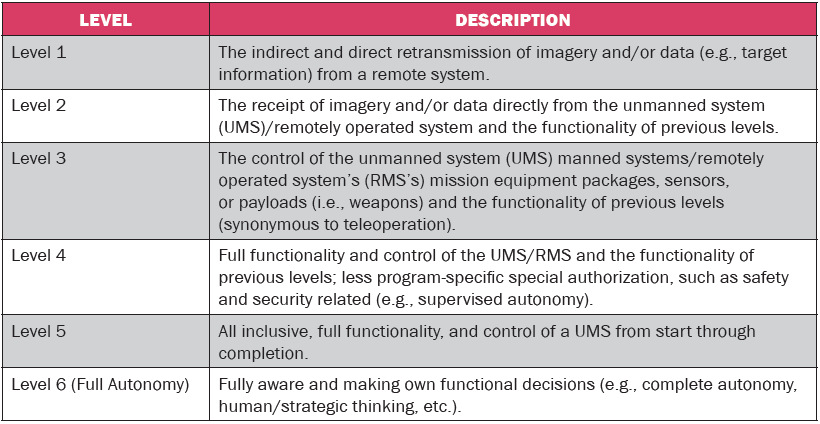

Levels of autonomy establish clear stages for how AI, ML, and robotic platforms are controlled and supervised based on their roles and scenarios. The U.S. Army Armament Research, Development and Engineering Center (ARDEC) has internally developed a draft set of autonomy levels for weaponized platforms to track progress and make development decisions [8]. Consistent with ARDEC’s strong systems engineering practices, understanding each of these levels and how it interacts with all stakeholders is critical. The draft levels are illustrated in Table 1.


Applications that could affect the Warfighter’s armament capability can be identified at every level. Understanding these levels is critical to ensuring that control is maintained at each level of autonomy. Countermeasures also need to be developed as adversaries field weapon platforms within these outlined levels. This raises the question of how AI and ML are currently used within existing armament systems, which consist of three basic subsystems: the weapon, the munition, and the fire control.

Table 1: Proposed Levels of Autonomy for Armament Systems

FIRE CONTROL APPLICATIONS

Modern fire control is typically digital, making it an obvious candidate for AI and ML. AI and ML already have a great impact on fire control and its kill chain, with considerable future potential. This is not surprising, because the first general-purpose electronic computer, ENIAC, was built in the 1940s to calculate the ballistic trajectories of artillery shells for fire control [9]. To see where AI and ML can be used within fire control, one must first review the fire control kill chain [10], which is the doctrinal basis for most armament systems. This kill chain is broken into six stages, as shown in Figure 1.

Moving through the chain, the target is identified and tracked, and a means of engagement is selected. Once this is accomplished, the weapon platform is aimed and fired. From that point, the munition is tracked and/or guided to the target, which is then assessed for further action. As mentioned earlier, one of the first uses of the computer was to calculate how the munition would fly, allowing the artillery gun to be pointed in the correct direction. This ability to exceed human calculation continues to be a theme within fire control and may be considered a low-level AI capability.
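The six stages described above can be sketched as an ordered pipeline. This is a minimal illustration only: the stage names are paraphrased from the prose rather than taken from Figure 1, and the `operator_approves` hook is a hypothetical stand-in for keeping the Warfighter “in-the-loop” before anything is fired.

```python
from enum import Enum
from typing import Callable, List


class KillChainStage(Enum):
    """Kill chain stages, paraphrased from the text (names are assumptions)."""
    IDENTIFY_AND_TRACK = 1
    SELECT_ENGAGEMENT = 2
    AIM = 3
    FIRE = 4
    TRACK_OR_GUIDE_MUNITION = 5
    ASSESS = 6


def run_kill_chain(operator_approves: Callable[[], bool]) -> List[KillChainStage]:
    """Step through the stages in order, holding before FIRE without approval.

    `operator_approves` is hypothetical: it represents the human decision
    that must be made before the weapon is released.
    """
    completed: List[KillChainStage] = []
    for stage in KillChainStage:  # Enum iteration follows definition order
        if stage is KillChainStage.FIRE and not operator_approves():
            break  # hold: no engagement without human approval
        completed.append(stage)
    return completed
```

For example, `run_kill_chain(lambda: False)` stops after the aim stage, while `run_kill_chain(lambda: True)` completes all six.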

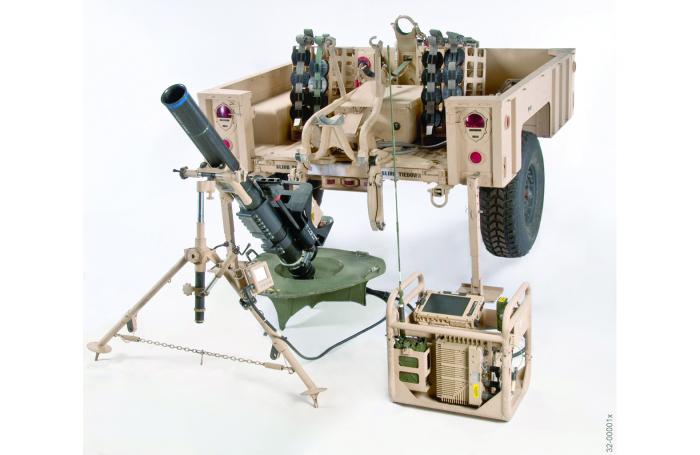

One currently fielded system provides ballistic calculations for mortar platforms. The Mortar Fire Control System (MFCS), used for 120-, 81-, and 60-mm mortar platforms, is fielded on multiple devices depending on the platform (Figure 2). Within this software, the ballistic solution is computed from multiple variables that affect the projectile when it is fired and in flight. The software algorithm adjusts the weapon’s aim using distance, wind, air pressure, and other factors such as propellant temperature, much as an archer adjusts the bow before releasing an arrow. Today, some may not consider this AI. However, measured against the early definition put forth by John McCarthy in 1956, “the science and engineering of making intelligent machines” [11], one can see how this was an early adoption of AI principles for armament systems.
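A minimal sketch of this kind of ballistic calculation, assuming a simple point-mass trajectory with quadratic drag, air density from pressure and temperature, and a constant headwind. This is not the MFCS algorithm: the drag constant `k_drag`, the muzzle velocity, and all function names are hypothetical. Propellant temperature would enter through `muzzle_v`; that correction is omitted here.

```python
import math

G = 9.80665      # standard gravity, m/s^2
R_AIR = 287.05   # specific gas constant for dry air, J/(kg*K)


def air_density(pressure_pa: float, temp_c: float) -> float:
    """Dry-air density (kg/m^3) from the ideal gas law."""
    return pressure_pa / (R_AIR * (temp_c + 273.15))


def simulate_range(elev_deg: float, muzzle_v: float, k_drag: float,
                   rho: float, headwind: float = 0.0, dt: float = 0.01) -> float:
    """Horizontal distance (m) at which the round returns to launch height.

    Point-mass model: drag acceleration = -(rho * k_drag) * |v_rel| * v_rel,
    where v_rel is velocity relative to the air and k_drag (hypothetical)
    lumps Cd * A / (2 * mass). A headwind blows back toward the gun.
    """
    theta = math.radians(elev_deg)
    x, y = 0.0, 0.0
    vx, vy = muzzle_v * math.cos(theta), muzzle_v * math.sin(theta)
    c = rho * k_drag
    while True:
        rel_vx = vx + headwind  # air moves toward the gun, so relative speed rises
        speed = math.hypot(rel_vx, vy)
        vx += -c * speed * rel_vx * dt
        vy += (-G - c * speed * vy) * dt
        x_prev, y_prev = x, y
        x += vx * dt
        y += vy * dt
        if y < 0.0:
            # Interpolate linearly to the point where y crosses zero
            frac = y_prev / (y_prev - y)
            return x_prev + frac * (x - x_prev)


def solve_elevation(target_m: float, muzzle_v: float, k_drag: float, rho: float,
                    headwind: float = 0.0, lo: float = 45.0, hi: float = 85.0,
                    tol: float = 1e-3) -> float:
    """High-angle (mortar-style) elevation in degrees that reaches target_m.

    On [lo, hi] range falls as elevation rises, so bisection applies.
    """
    def rng(e: float) -> float:
        return simulate_range(e, muzzle_v, k_drag, rho, headwind)

    if not rng(hi) <= target_m <= rng(lo):
        raise ValueError("target outside reach for this charge")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if rng(mid) > target_m:
            lo = mid  # still long: raise elevation
        else:
            hi = mid  # short: lower elevation
    return 0.5 * (lo + hi)
```

With hypothetical values such as `muzzle_v=200.0` and `k_drag=1e-4` at standard sea-level density, adding a 10 m/s headwind lowers the solved high-angle elevation, because the headwind shortens the trajectory and a flatter shot is needed to reach the same target.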

As image processing is currently a major focus area within AI development, it links naturally to the first step in the fire control kill chain: “identify and track” the potential target. Many systems have been developed that classify and track objects within a visual area.
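A minimal sketch of the “track” half, assuming an upstream detector or classifier (not shown) already reports one object’s pixel centroid each frame. It swaps in a classic alpha-beta filter, a non-ML technique, to smooth those detections and estimate the object’s motion; the class name and gains are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class AlphaBetaTrack:
    """Constant-velocity alpha-beta filter on an object's pixel centroid.

    Gains are hypothetical; stability requires 0 < alpha < 2 and
    0 < beta < 4 - 2 * alpha.
    """
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0
    alpha: float = 0.5  # weight given to the position residual
    beta: float = 0.1   # weight given to the velocity residual

    def update(self, meas_x: float, meas_y: float, dt: float = 1.0) -> Tuple[float, float]:
        # Predict where the object should be this frame
        pred_x = self.x + self.vx * dt
        pred_y = self.y + self.vy * dt
        # Residual between the detector's centroid and the prediction
        res_x, res_y = meas_x - pred_x, meas_y - pred_y
        # Correct the position and velocity estimates
        self.x = pred_x + self.alpha * res_x
        self.y = pred_y + self.alpha * res_y
        self.vx += (self.beta / dt) * res_x
        self.vy += (self.beta / dt) * res_y
        return self.x, self.y
```

Fed centroids from an object moving at constant velocity, the velocity estimate converges to the true motion within a few dozen frames, which is what lets later kill chain stages predict where the target will be.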


Orientation and pointing remain among the most difficult areas in which to develop solutions within the fire control kill chain. Currently, ring laser or fiber-optic gyroscopes are the go-to solutions for finding north with the accuracy needed for successful weapon pointing. The issue with this technology is its SWaP (size, weight, and power) and cost. Typical ring laser gyros capable of 1-mil accuracy weigh approximately 16 lb, measure at least 5 × 7 × 8.5 in., and cost between $50K and $100K, depending on the volume purchased. This size and cost make them impractical for smaller-caliber weapon platforms such as the 60- and 81-mm mortar systems previously referenced. To overcome this, the Weaponized Universal Lightweight Fire (WULF) control system (Figure 3) was developed.
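To make the mil figures concrete, the arithmetic below converts a pointing error into a lateral miss distance at range. It assumes the NATO convention of 6,400 mils per circle (other mil definitions exist) and a pure azimuth error; the function names are illustrative.

```python
import math

MILS_PER_CIRCLE = 6400  # NATO mil convention (assumption; other definitions exist)


def mils_to_radians(mils: float) -> float:
    return mils * 2.0 * math.pi / MILS_PER_CIRCLE


def cross_range_error(range_m: float, pointing_error_mils: float) -> float:
    """Lateral miss distance (m) at range_m caused by a pure azimuth error."""
    return range_m * math.tan(mils_to_radians(pointing_error_mils))


for err in (1.0, 2.0, 3.0):
    print(f"{err:.0f} mil at 3,000 m -> {cross_range_error(3000.0, err):.1f} m")
# 1 mil at 3,000 m -> 2.9 m
# 2 mil at 3,000 m -> 5.9 m
# 3 mil at 3,000 m -> 8.8 m
```

This reproduces the familiar rule of thumb that 1 mil subtends roughly 1 m at 1 km, so each mil of pointing accuracy gained or lost scales directly with range.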

WULF has been transitioned to PEO Ammo for fielding in 2022. The system uses multiple low-cost, miniature microelectromechanical systems (MEMS) sensors (such as accelerometers, magnetometers, and inclinometers) in conjunction with a camera to provide pointing solutions for weapon systems. Integrating multiple sensors is advantageous: by understanding the strengths and weaknesses of each sensor, a robust combined solution was developed. The WULF system can point with ~3-mil accuracy at a cost of ~$10K and a weight of ~1.8 lb. The link to AI and ML is that the integrated sensors have more than 130 variables, or sources of error, that can influence the accuracy of the WULF pointing device. These range from variations in the weapon platform to which it is connected, to variations in the orientation of the sensor chips as soldered to the base circuit board. To optimize the system, an ML algorithm was adopted that considers all 130+ variables and allows the fire control sensor to teach itself to be more accurate in the situation in which it is operating. This improved accuracy from ~3 mils to ~2 mils, which the orientation and pointing community considers a significant accomplishment.
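WULF’s actual algorithm is not described here, so the sketch below swaps in plain least-mean-squares (LMS) online regression to show what “teaching itself” can look like. It assumes the pointing error is roughly linear in a handful of error-source readings and that a reference truth (for example, a surveyed aiming point) is occasionally available. The four synthetic features, their sensitivities, and all names are hypothetical stand-ins for the 130+ real variables.

```python
import random
from typing import List, Sequence, Tuple


class OnlineErrorModel:
    """Linear pointing-error model learned online with least-mean-squares (LMS)."""

    def __init__(self, n_features: int, learning_rate: float = 0.05) -> None:
        self.w: List[float] = [0.0] * n_features
        self.bias = 0.0
        self.lr = learning_rate

    def predict(self, features: Sequence[float]) -> float:
        """Predicted pointing error (mils) for the current error-source readings."""
        return self.bias + sum(wi * fi for wi, fi in zip(self.w, features))

    def update(self, features: Sequence[float], observed_error: float) -> None:
        """One stochastic-gradient step on squared error, using a reference truth."""
        residual = observed_error - self.predict(features)
        self.bias += self.lr * residual
        self.w = [wi + self.lr * residual * fi for wi, fi in zip(self.w, features)]

    def correct(self, raw_azimuth_mils: float, features: Sequence[float]) -> float:
        """Subtract the learned error from a raw sensor reading."""
        return raw_azimuth_mils - self.predict(features)


# Synthetic demo: four hypothetical error sources stand in for the 130+.
TRUE_W = [1.5, -0.8, 0.6, 0.3]  # hypothetical sensitivities, mils per unit
TRUE_BIAS = 0.7                 # hypothetical fixed mounting offset, mils


def sample(rng: random.Random) -> Tuple[List[float], float]:
    features = [rng.uniform(-1.0, 1.0) for _ in TRUE_W]
    error = TRUE_BIAS + sum(w * f for w, f in zip(TRUE_W, features))
    return features, error + rng.gauss(0.0, 0.1)  # 0.1-mil measurement noise


rng = random.Random(0)
model = OnlineErrorModel(len(TRUE_W))
for _ in range(2000):
    model.update(*sample(rng))
```

LMS is chosen here only because it updates one observation at a time, which matches a device refining itself in the field; a real system with 130+ correlated, possibly nonlinear error sources may need a richer model.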

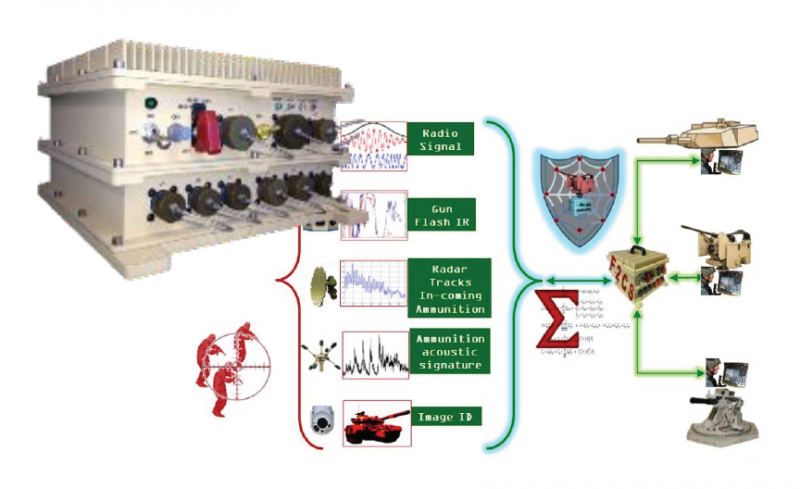

The fourth example directly links to the ability of algorithms to process information and make complex decisions faster and more accurately than humans. The Flexible Fire Control System (F2CS) (Figure 4) was developed to provide a fully integrated fire control solution that can adapt to incoming threats. F2CS uses an AI algorithm to link existing threat detection systems to armament systems that can defeat an incoming threat, by integrating multiple threat detection systems into the algorithm. If a threat is detected and classified, the algorithm consults the database of connected weapon platforms, selects the best weapon or weapons to defeat the target, and slews/aims the weapon(s) to engage. In less than a second, this is presented to the Warfighter for engagement approval. Without this AI algorithm, the processing and decision-making time might be too long to defeat the threat. This example illustrates the importance of the levels of autonomy discussed earlier. As the fire control system goes through the kill chain process to identify, classify, slew, and engage the target, time is critical. Because of these time limitations, the only way to ensure Warfighter survivability and defeat the threat may be to build in this type of autonomy. One approach is to preapprove an engagement airspace around a base: if an incoming threat enters that preselected area, the system’s autonomy expands and the fire control is authorized to engage the object.
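A minimal sketch of the selection-and-authorization logic described above, assuming a flat local grid, a single threat position, and a hypothetical “fastest slew among in-range weapons” criterion. F2CS’s actual selection criteria are not given in the text, and all names here are hypothetical. The circle around the base models the preapproved engagement zone; outside it, the `operator_approves` callback stands in for the Warfighter’s approval.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Weapon:
    name: str
    x: float              # local grid position, m
    y: float
    max_range_m: float
    slew_rate_deg_s: float
    azimuth_deg: float    # current pointing, compass degrees


@dataclass
class Threat:
    x: float
    y: float


def bearing_deg(w: Weapon, t: Threat) -> float:
    """Compass bearing from weapon to threat (0 = +y/north, clockwise)."""
    return math.degrees(math.atan2(t.x - w.x, t.y - w.y)) % 360.0


def slew_time_s(w: Weapon, t: Threat) -> float:
    diff = abs(bearing_deg(w, t) - w.azimuth_deg) % 360.0
    return min(diff, 360.0 - diff) / w.slew_rate_deg_s  # shortest rotation


def select_weapon(weapons: List[Weapon], t: Threat) -> Optional[Weapon]:
    """Hypothetical criterion: fastest-slewing weapon with the threat in range."""
    in_range = [w for w in weapons
                if math.hypot(t.x - w.x, t.y - w.y) <= w.max_range_m]
    return min(in_range, key=lambda w: slew_time_s(w, t), default=None)


def engage(weapons: List[Weapon], t: Threat, base: Tuple[float, float],
           zone_radius_m: float,
           operator_approves: Callable[[Weapon, Threat], bool]) -> Optional[str]:
    """Return the name of the weapon cleared to engage, or None.

    Inside the preapproved zone the system may engage on its own; outside
    it, the Warfighter must approve the recommended engagement.
    """
    w = select_weapon(weapons, t)
    if w is None:
        return None
    in_zone = math.hypot(t.x - base[0], t.y - base[1]) <= zone_radius_m
    if in_zone or operator_approves(w, t):
        return w.name
    return None
```

Because `in_zone` is checked first, the operator is never queried for a threat already inside the preapproved airspace, which mirrors the expanded-autonomy case in the text; everywhere else the human decision remains the final gate.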

These last four examples are all organic Army-developed programs. However, the United States is not the only country developing armament systems with AI and ML capabilities. For example, the SGR-A1 [12] is an autonomously firing weapon platform capable of executing all stages of the fire control kill chain. Developed to monitor the demilitarized zone between North and South Korea, the SGR-A1 is an example of level 5/6 weaponized autonomy with AI systems (Figure 5). If activated, it is given full authority to execute the kill chain autonomously on any object that moves within its area, whether that is an armored vehicle or a squirrel. This naturally turns attention to the ethical considerations and levels of trust associated with armament systems that use AI/ML and have high levels of autonomy, and to how our Warfighters will trust and operate with these platforms.

![Figure 5: SGR-A1 Autonomous Weapon (Source: SGR-A1 [12]).](/wp-content/uploads/2019/11/dsiacjournal_fall2018_tillinghast_fig5.jpg)

TRUST

These efforts support our Warfighters and help ensure they continue to have overmatch during operations. As AI-based systems are further integrated into our platforms, the interface between humans and machines becomes more critical. One question is the level of trust that will be established between these platforms and our Warfighters. The initial inclination is that Warfighters will distrust the system; however, research by the Georgia Institute of Technology has found that humans overtrust robots in some situations [13]. The bond between AI-based systems and the Warfighter will need to be further explored and understood to ensure that decisions in real-time, high-stress situations are not compromised. Research in this area has been conducted with explosive ordnance disposal (EOD) operators and their field robots [14]. Patterns were identified, but further research is needed to fully understand how trust will play out in the operational environment.

CONCLUSION

Overall, as the examples show, the benefits and advantages of AI and ML are already being capitalized on within our armament systems. These benefits are just a small sampling focused on weapon platforms; as these technologies expand across the entire U.S. Department of Defense logistical footprint, the potential impact is easy to see. The balance that must be maintained is control over the technology, in both development and use. AI and ML will no doubt increase the effectiveness of weapon platforms. This impact is multiplied when combined with other emerging technologies, including sensor saturation through the Internet of Things, massive data analytics through deep learning and big data methods, modern cognitive science, social network technologies, and other technologies that allow a wider and deeper understanding of how integrated complex systems operate. These advancements must still navigate the growing ethical concerns surrounding these systems and align with and adapt to the Law of War (legal) obstacles that still exist.

References:

- Faggella, D. “Valuing the Artificial Intelligence Market, Graphs and Predictions.” TechEmergence, March 2018, www.techemergence.com, accessed 1 June 2018.

- Kurzweil, R. “Timing the Singularity.” The Futurist, http://www.singularity2050.com/2009/08/timing-the-singularity.html, accessed 1 June 2018.

- Sample, I. (editor). “Ban on Killer Robots Urgently Needed, Say Scientists.” The Guardian, https://www.theguardian.com/science/2017/nov/13/ban-on-killer-robots-urgently-needed-say-scientists, accessed 1 June 2018.

- Schroeder, T. W. “Policies on the Employment of Lethal Autonomous Weapon Systems in Future Conflicts,” 2016.

- United Nations. “Responsibility of States for Internationally Wrongful Acts 2001.” International Law Commission, Articles 30–31, pp. 34–39, 2008.

- Lubell, N. “Robot Warriors: Technology and the Regulation of War.” TEDxUniversityofEssex, YouTube.com 13:44, 2014.

- Robertson, J. “Human Rights vs. Robot Rights: Forecasts From Japan.” Critical Asian Studies 46.4, pp. 571–598, 2014.

- Tillinghast, R., et al. “Guns, Bullets, Fire Control and AI.” NDIA, Armaments Systems Forum, 2018.

- Computer History Museum. “ENIAC.” http://www.computerhistory.org/revolution/birth-of-the-computer/4/78, accessed 1 June 2018.

- Tillinghast, R., et al. “Fire Control 101.” National Fire Control Symposium, 2017.

- McCarthy, J. “Artificial Intelligence.” Science Daily, https://www.sciencedaily.com/terms/artificial_intelligence.htm, accessed 13 April 2018.

- SGR-A1. https://en.wikipedia.org/wiki/SGR-A1, accessed 2 June 2018.

- Robinette, P., et al. “Overtrust of Robots in Emergency Evacuation Scenarios.” Human-Robot Interaction (HRI), 11th ACM/IEEE International Conference, 2016.

- Carpenter, J. “The Quiet Professional: An Investigation of US Military Explosive Ordnance Disposal Personnel Interactions